It is important that credit card companies are able to recognize fraudulent credit card transactions so that customers are not charged for items that they did not purchase.

Fraud usually happens when someone obtains a credit or debit card number, through an unprotected website or an identity-theft scheme, and uses it to obtain money or property illegally.

Because fraud occurs frequently and can harm both individuals and financial institutions, it is critical both to take preventive steps and to recognize when a transaction is fraudulent.

Data-set

https://www.kaggle.com/mlg-ulb/creditcardfraud

The data-set can be downloaded from the link above.

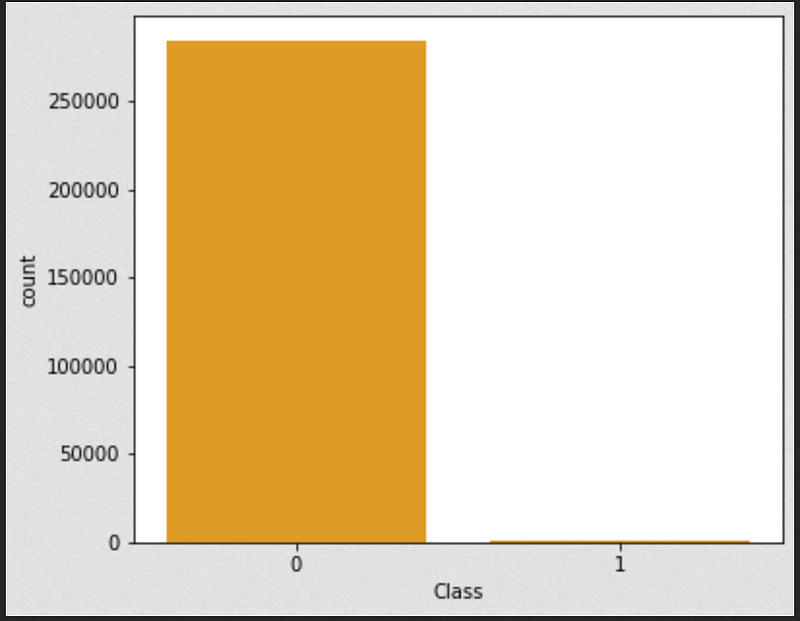

- 492 frauds out of 284,807 transactions (about 0.17%)

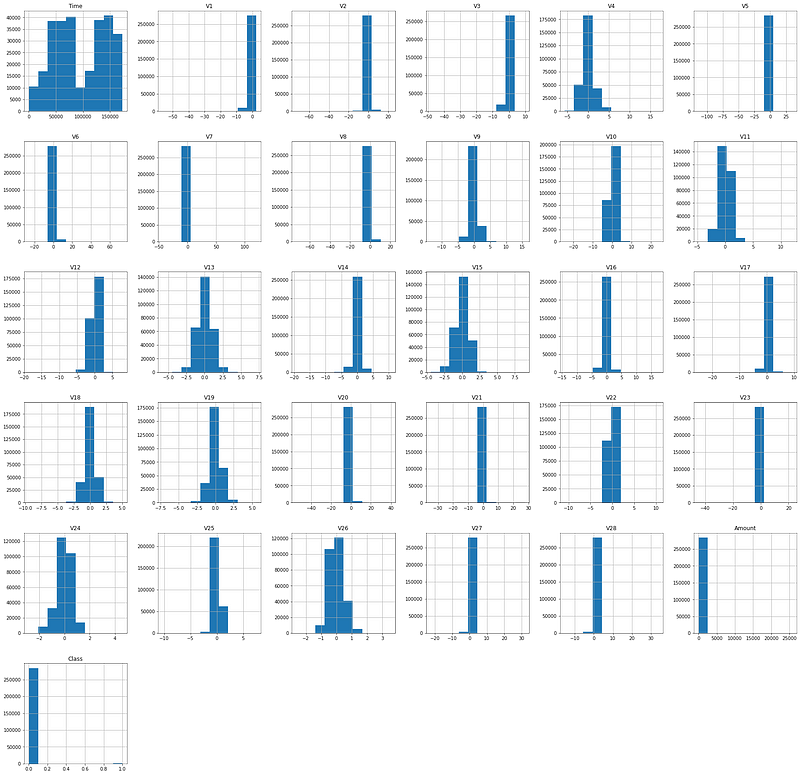

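- features V1 to V28 are the principal components produced by a PCA transformation; the original features are not released for confidentiality reasons, so these columns have no directly interpretable meaning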

- “Amount” is the value in dollars of the transaction

- “Time” is the number of seconds elapsed between each transaction and the first transaction in the data-set

- “Class” is the label: Fraud = 1, Not Fraud = 0

The data-set is imported from the downloaded CSV file.
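A minimal loading sketch with pandas. It assumes the CSV was saved as `creditcard.csv` (the Kaggle file name) and keeps the `Time`, `V1` to `V28`, `Amount`, `Class` columns described above; the helper name `load_creditcard` is hypothetical, not taken from the original code.

```python
import pandas as pd


def load_creditcard(path: str = "creditcard.csv"):
    """Read the credit card CSV and split it into features and label.

    Assumes the Kaggle column layout: Time, V1..V28, Amount, Class.
    """
    df = pd.read_csv(path)
    X = df.drop(columns=["Class"])  # 30 feature columns
    y = df["Class"]                 # 1 = fraud, 0 = not fraud
    return X, y
```

On the full file this should give 284,807 rows with 30 feature columns, 492 of them labeled 1.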

Data Visualization:

The number of transactions per class label is visualized first.
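One way to draw this chart, sketched with pandas and matplotlib; `plot_class_counts` is a hypothetical helper, and the non-interactive `Agg` backend is set only so the sketch runs without a display.

```python
import matplotlib

matplotlib.use("Agg")  # off-screen rendering; not needed in a notebook
import matplotlib.pyplot as plt
import pandas as pd


def plot_class_counts(y: pd.Series):
    """Bar-plot the number of transactions per class label; return (counts, figure)."""
    counts = y.value_counts().sort_index()  # index 0 = not fraud, 1 = fraud
    names = {0: "Not Fraud (0)", 1: "Fraud (1)"}
    counts.index = [names.get(label, str(label)) for label in counts.index]
    fig, ax = plt.subplots()
    counts.plot(kind="bar", ax=ax, rot=0)
    ax.set_xlabel("Class")
    ax.set_ylabel("Number of transactions")
    ax.set_title("Count per class label")
    return counts, fig
```

With 492 frauds against 284,315 legitimate transactions, the fraud bar is barely visible on a linear axis; calling `ax.set_yscale("log")` is one option if that matters.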

Histograms of the values in each column are displayed below:

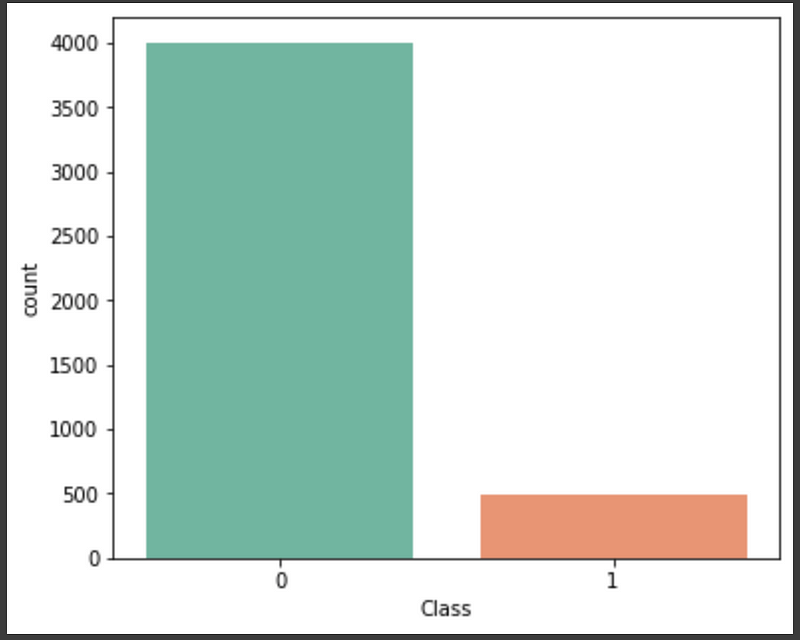
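A sketch of how such a grid can be produced with pandas' `DataFrame.hist`, which draws one histogram per numeric column; the bin count, figure size, and helper name are assumptions.

```python
import matplotlib

matplotlib.use("Agg")  # off-screen rendering; not needed in a notebook
import pandas as pd


def plot_feature_histograms(df: pd.DataFrame, bins: int = 50):
    """Draw one histogram per numeric column on a grid; return the array of Axes."""
    axes = df.hist(bins=bins, figsize=(20, 20))
    axes.flat[0].get_figure().tight_layout()
    return axes
```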

Balancing the Data-set

Because the data-set is highly imbalanced (fraud makes up about 0.17% of transactions), it is balanced so that the two classes are closer in size.
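The article does not say how the classes were balanced. A common choice, sketched here as an assumption, is random undersampling: keep every fraud row and randomly sample a subset of the legitimate rows. The helper name and its `ratio` parameter (legitimate rows kept per fraud row) are hypothetical.

```python
import pandas as pd


def undersample_majority(df: pd.DataFrame, label: str = "Class",
                         ratio: float = 1.0, seed: int = 0) -> pd.DataFrame:
    """Keep every minority-class row and a random subset of majority-class rows.

    ratio = majority rows kept per minority row (capped at what is available).
    """
    counts = df[label].value_counts()  # sorted largest class first
    majority, minority = counts.index[0], counts.index[-1]
    n_keep = min(int(ratio * counts.loc[minority]), int(counts.loc[majority]))
    kept_majority = df[df[label] == majority].sample(n=n_keep, random_state=seed)
    balanced = pd.concat([df[df[label] == minority], kept_majority])
    # Shuffle so the two classes are interleaved before splitting.
    return balanced.sample(frac=1.0, random_state=seed).reset_index(drop=True)
```

On the full data-set, `ratio=1.0` would keep 492 rows of each class. Oversampling the fraud class instead (for example with SMOTE from the imbalanced-learn package) is the usual alternative.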

Standardization

The input data is standardized, because the features span very different ranges.
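A sketch with scikit-learn's `StandardScaler`, assuming a stratified 80/20 train/test split (the split ratio is not stated in the article). The scaler's mean and standard deviation are learned on the training split only and reused for the test split, so no test statistics leak into training.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler


def split_and_scale(X, y, test_size: float = 0.2, seed: int = 0):
    """Stratified split, then standardize each feature to zero mean, unit variance."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=test_size, stratify=y, random_state=seed)
    scaler = StandardScaler()
    X_train = scaler.fit_transform(X_train)  # learn mean/std on training data only
    X_test = scaler.transform(X_test)        # apply the same mean/std to test data
    return X_train, X_test, np.asarray(y_train), np.asarray(y_test), scaler
```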

Reshaping data

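The data is reshaped into a 3-dimensional array of shape (samples, features, 1), the (batch, steps, channels) layout that Keras Conv1D layers expect.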
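A minimal sketch of this step with NumPy; the helper name is hypothetical. Each of the 30 features becomes one "step" with a single channel. This is consistent with the model summary: a Conv1D layer with 32 filters and kernel size 2 on a (30, 1) input has 2 × 1 × 32 + 32 = 96 parameters and an output length of 30 − 2 + 1 = 29.

```python
import numpy as np


def to_conv1d_input(X) -> np.ndarray:
    """Reshape (samples, features) to (samples, features, 1) for Conv1D."""
    X = np.asarray(X, dtype="float32")
    return X.reshape(X.shape[0], X.shape[1], 1)
```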

Model Building:

A 1-D convolutional neural network (CNN) is built with Keras. Its model summary is:

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv1d (Conv1D) | (None, 29, 32) | 96 |
| dropout (Dropout) | (None, 29, 32) | 0 |
| batch_normalization (BatchNormalization) | (None, 29, 32) | 128 |
| conv1d_1 (Conv1D) | (None, 28, 64) | 4,160 |
| dropout_1 (Dropout) | (None, 28, 64) | 0 |
| flatten (Flatten) | (None, 1792) | 0 |
| dropout_2 (Dropout) | (None, 1792) | 0 |
| dense (Dense) | (None, 64) | 114,752 |
| dropout_3 (Dropout) | (None, 64) | 0 |
| dense_1 (Dense) | (None, 1) | 65 |

Total params: 119,201 (trainable: 119,137; non-trainable: 64)
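The layer types, output shapes, and parameter counts in the summary pin down most of the architecture: 96 = 2 × 1 × 32 + 32 and 4,160 = 2 × 32 × 64 + 64 imply kernel size 2 for both Conv1D layers, and 114,752 = 1,792 × 64 + 64 for the first Dense layer. The summary does not show activations, dropout rates, optimizer, or learning rate, so the values in this sketch (ReLU, a sigmoid output, the listed dropout rates, Adam at 1e-4) are assumptions, not the author's settings.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_model(n_features: int = 30) -> keras.Model:
    """Rebuild a network matching the summary's shapes and parameter counts."""
    model = keras.Sequential([
        keras.Input(shape=(n_features, 1)),
        layers.Conv1D(32, kernel_size=2, activation="relu"),  # (29, 32), 96 params
        layers.Dropout(0.1),                                  # rate assumed
        layers.BatchNormalization(),                          # 128 params, 64 non-trainable
        layers.Conv1D(64, kernel_size=2, activation="relu"),  # (28, 64), 4,160 params
        layers.Dropout(0.2),                                  # rate assumed
        layers.Flatten(),                                     # 28 * 64 = 1,792
        layers.Dropout(0.4),                                  # rate assumed
        layers.Dense(64, activation="relu"),                  # 114,752 params
        layers.Dropout(0.5),                                  # rate assumed
        layers.Dense(1, activation="sigmoid"),                # 65 params, P(fraud)
    ])
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4),
                  loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

`build_model().summary()` should list the same shapes and a total of 119,201 parameters.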

Model Training

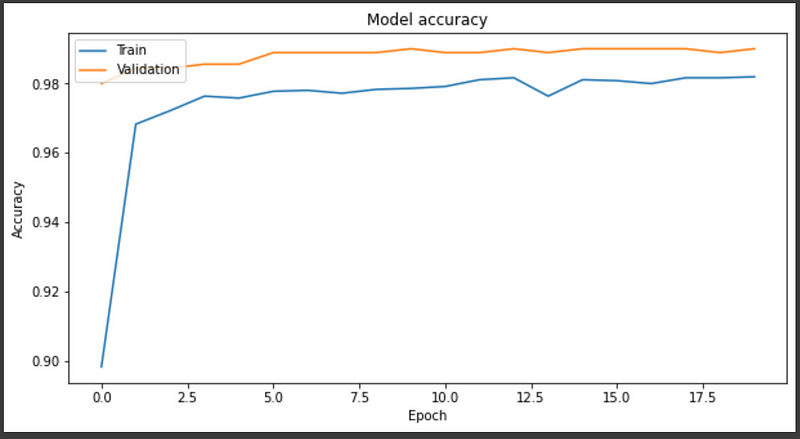

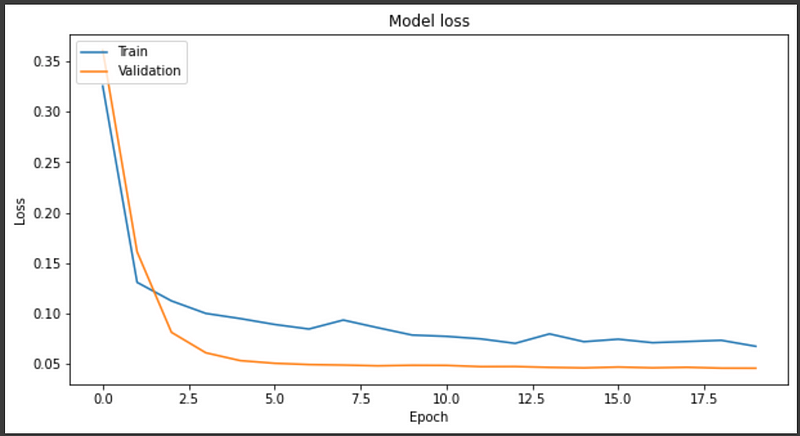

The model is fitted on the scaled and reshaped data for 20 epochs:
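A sketch of the fitting call, assuming a model compiled as in a binary-classification setup with an `accuracy` metric. The batch size of 32 is Keras's default and an assumption here; with 113 steps per epoch it would correspond to roughly 3,600 training samples. Passing the held-out test split as `validation_data` is also an assumption, suggested by the final `val_accuracy` (0.9900) matching the test accuracy reported in the evaluation.

```python
from tensorflow import keras


def train(model: keras.Model, X_train, y_train, X_val, y_val,
          epochs: int = 20, batch_size: int = 32):
    """Fit the model and return the Keras History object (per-epoch metrics)."""
    return model.fit(
        X_train, y_train,
        validation_data=(X_val, y_val),  # produces the val_loss / val_accuracy columns
        epochs=epochs,
        batch_size=batch_size,
        verbose=1,
    )
```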

Training log (113 steps per epoch, 3 to 4 ms per step):

| Epoch | loss | accuracy | val_loss | val_accuracy |
|---|---|---|---|---|
| 1 | 0.3254 | 0.8984 | 0.3610 | 0.9800 |
| 2 | 0.1309 | 0.9683 | 0.1611 | 0.9844 |
| 3 | 0.1125 | 0.9722 | 0.0813 | 0.9844 |
| 4 | 0.1001 | 0.9763 | 0.0610 | 0.9855 |
| 5 | 0.0950 | 0.9758 | 0.0533 | 0.9855 |
| 6 | 0.0891 | 0.9777 | 0.0506 | 0.9889 |
| 7 | 0.0846 | 0.9780 | 0.0494 | 0.9889 |
| 8 | 0.0935 | 0.9772 | 0.0489 | 0.9889 |
| 9 | 0.0859 | 0.9783 | 0.0481 | 0.9889 |
| 10 | 0.0786 | 0.9786 | 0.0487 | 0.9900 |
| 11 | 0.0773 | 0.9791 | 0.0485 | 0.9889 |
| 12 | 0.0748 | 0.9811 | 0.0473 | 0.9889 |
| 13 | 0.0704 | 0.9816 | 0.0474 | 0.9900 |
| 14 | 0.0798 | 0.9763 | 0.0466 | 0.9889 |
| 15 | 0.0720 | 0.9811 | 0.0461 | 0.9900 |
| 16 | 0.0745 | 0.9808 | 0.0469 | 0.9900 |
| 17 | 0.0710 | 0.9800 | 0.0461 | 0.9900 |
| 18 | 0.0722 | 0.9816 | 0.0467 | 0.9900 |
| 19 | 0.0734 | 0.9816 | 0.0458 | 0.9889 |
| 20 | 0.0675 | 0.9819 | 0.0458 | 0.9900 |

The network reaches a training accuracy of about 98% (0.9819 after the final epoch) and a validation accuracy of 0.9900.

The epochs vs. accuracy graph for the model is plotted below:

The epochs vs. loss graph is plotted below:

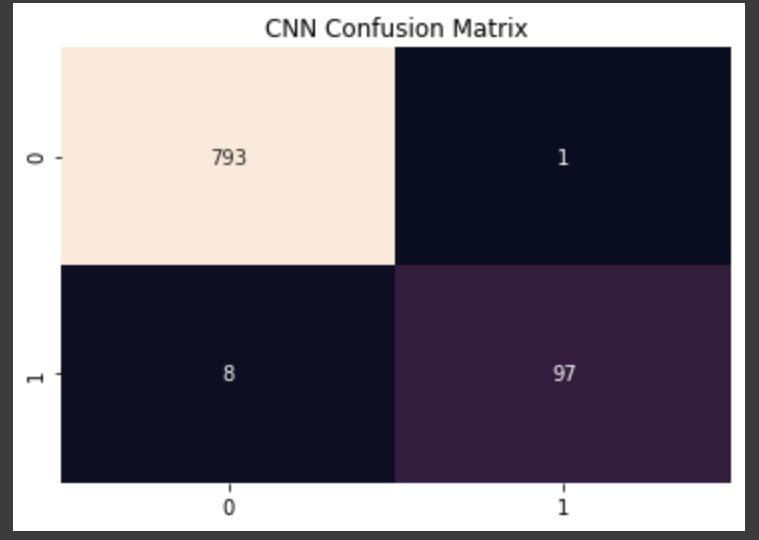
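Both graphs can be drawn from the `history` dictionary that Keras's `fit` returns (`History.history`, keyed by `loss`, `accuracy`, `val_loss`, `val_accuracy`). A sketch with matplotlib; the side-by-side layout and the helper name are assumptions.

```python
import matplotlib

matplotlib.use("Agg")  # off-screen rendering; not needed in a notebook
import matplotlib.pyplot as plt


def plot_history(history: dict):
    """Plot training/validation accuracy and loss per epoch from a Keras history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(12, 4))
    ax_acc.plot(epochs, history["accuracy"], label="train")
    ax_acc.plot(epochs, history["val_accuracy"], label="validation")
    ax_acc.set(xlabel="Epoch", ylabel="Accuracy", title="Epochs vs Accuracy")
    ax_acc.legend()
    ax_loss.plot(epochs, history["loss"], label="train")
    ax_loss.plot(epochs, history["val_loss"], label="validation")
    ax_loss.set(xlabel="Epoch", ylabel="Loss", title="Epochs vs Loss")
    ax_loss.legend()
    fig.tight_layout()
    return fig
```

Usage: `plot_history(history.history)`, where `history` is the object returned by `model.fit`.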

Evaluation:

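The model's accuracy on the test set is 0.9899888765294772, i.e. 890 of the 899 test samples are classified correctly.

A test accuracy of about 99% is achieved.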
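A sketch of how test accuracy is obtained from the network's sigmoid outputs, assuming a 0.5 decision threshold (the threshold is not stated in the article); both helper names are hypothetical.

```python
import numpy as np


def predict_labels(probs, threshold: float = 0.5) -> np.ndarray:
    """Turn sigmoid outputs (P(fraud)) into 0/1 labels."""
    return (np.asarray(probs).ravel() > threshold).astype(int)


def accuracy(y_true, y_pred) -> float:
    """Fraction of samples whose predicted label equals the true label."""
    return float(np.mean(np.asarray(y_true).ravel() == np.asarray(y_pred).ravel()))


# Typical use: accuracy(y_test, predict_labels(model.predict(X_test)))
# Arithmetic check: 890 correct out of 899 test samples gives about 0.98999.
```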

Other metrics are calculated per class on the test set:

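| Class | Precision | Recall | F-score | Support |
|---|---|---|---|---|
| Not Fraud (0) | 0.99001248 | 0.99874055 | 0.99435737 | 794 |
| Fraud (1) | 0.98979592 | 0.92380952 | 0.95566502 | 105 |

In other words, 97 of the 105 fraudulent test transactions are detected and 8 are missed, while 1 of the 794 legitimate transactions is wrongly flagged as fraud.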
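The per-class arrays in the original output have the shape returned by scikit-learn's `precision_recall_fscore_support`; that this function was used is an assumption, since the code is not shown. Working backwards from the reported support and recall gives the confusion-matrix counts (793 true negatives, 1 false positive, 8 false negatives, 97 true positives). This sketch rebuilds labels with exactly those derived counts and recomputes the metrics as a check.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_recall_fscore_support

# Labels reconstructed from the derived counts (not the article's actual predictions):
# 794 legitimate (793 predicted 0, 1 predicted 1), 105 fraud (8 predicted 0, 97 predicted 1).
y_true = np.array([0] * 794 + [1] * 105)
y_pred = np.array([0] * 793 + [1] * 1 + [0] * 8 + [1] * 97)

precision, recall, fscore, support = precision_recall_fscore_support(y_true, y_pred)
print("precision:", precision)
print("recall:", recall)
print("fscore:", fscore)
print("support:", support)
print(confusion_matrix(y_true, y_pred))  # rows = true class, columns = predicted class
```

For a fraud detector, the fraud-class recall (97 / 105) is usually the number to watch, since each missed fraud is a charge the customer did not make.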

Conclusion:

A 1-D CNN model to detect credit card fraud is built, reaching about 98% training accuracy and about 99% test accuracy, with about 92% recall on the fraud class.

Platform: cAInvas

Code: Here

Credit: Dheeraj Perumandla
