Crop weeds are weeds that grow amongst crops. Despite the potential for some crop weeds to be used as a food source, many can also prove harmful to crops, both directly and indirectly.

Crop weeds can inhibit the growth of crops, contaminate harvested crops, and often spread rapidly. Farmers therefore need to detect weeds and remove them in order to protect their crops.

Table of Contents

- Introduction to cAInvas

- Source of Data

- Data Augmentation

- Creation of Dataset for Model Training

- Data Visualization

- Model Training

- Introduction to DeepC

- Compilation with DeepC

Introduction to cAInvas

cAInvas is an integrated development platform for creating intelligent edge devices. Not only can we train deep learning models using TensorFlow, Keras, or PyTorch, we can also compile them with its edge compiler, DeepC, to deploy working models on edge devices for production.

The Crop Weed Detection project is also part of the cAInvas gallery. All the dependencies needed for this project come pre-installed.

cAInvas also offers various other deep learning notebooks in its gallery, which can be used for reference or to gain insight into deep learning. It also provides GPU support, which speeds up model training.

Source of Data

One of the key features of cAInvas is its UseCases Gallery. When working on any of its use cases, you don’t have to look for data manually, because the dataset can be imported directly into your workspace. To load the data we just have to enter the following commands:

Running the above commands will load the labelled data into your workspace, where it will be used for model training.

Data Augmentation

Since the dataset is small, we increase its size through augmentation so that the model gets enough data for proper training. The augmentations we introduce are rotation, width shift, height shift, shear, brightness adjustment, horizontal flip, and vertical flip.

Once done with the augmentations, we create a new directory that stores all the original images as well as the augmented ones. For this we can run the following function:
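To make the idea concrete, here is a minimal NumPy sketch of three of the listed augmentations: flips, width/height shift, and brightness. It is not the notebook's own code. The function name `augment`, the 10% shift limit, and the 0.8 to 1.2 brightness range are assumptions chosen for illustration, and rotation and shear are left out for brevity. The augmentation names in the list do match arguments of Keras's `ImageDataGenerator` (`rotation_range`, `width_shift_range`, `shear_range`, and so on), but whether the original notebook used that class is also an assumption.

```python
import numpy as np


def augment(image, rng):
    """Return a randomly flipped, shifted and brightened copy of an HxWxC uint8 image."""
    out = image.astype(np.float32)

    if rng.random() < 0.5:  # horizontal flip
        out = out[:, ::-1, :]
    if rng.random() < 0.5:  # vertical flip
        out = out[::-1, :, :]

    # Height/width shift by up to 10% of the image size (assumed limit).
    # np.roll wraps pixels around the border; real pipelines usually pad instead.
    h, w = out.shape[:2]
    max_dy, max_dx = h // 10, w // 10
    dy = int(rng.integers(-max_dy, max_dy + 1))
    dx = int(rng.integers(-max_dx, max_dx + 1))
    out = np.roll(out, shift=(dy, dx), axis=(0, 1))

    # Brightness: scale all pixels by a random factor (assumed range 0.8 to 1.2).
    out = np.clip(out * rng.uniform(0.8, 1.2), 0, 255)
    return out.astype(np.uint8)


# Placeholder image standing in for one photo from the dataset.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)
augmented = [augment(image, rng) for _ in range(4)]  # four new variants of one image
```

Each call produces a different variant of the same source image, which is how a small labelled set can be expanded without collecting new photos.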

Creation of Dataset for Model Training

The next step is to load the pixel values of the images as NumPy arrays, along with the target labels, which we will pass to the model for training. We use the train-test split function from scikit-learn to prepare the training and test datasets.
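The splitting step can be sketched as follows. This is a NumPy-only stand-in that mimics what scikit-learn's `train_test_split` does (shuffle, then cut), not the notebook's own code. The helper name `split_dataset`, the 20% test fraction, the seed, and the placeholder arrays are all assumptions made for illustration.

```python
import numpy as np


def split_dataset(images, labels, test_fraction=0.2, seed=42):
    """Shuffle the samples and split them into train and test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(images))  # random order of sample indices
    n_test = int(round(len(images) * test_fraction))
    test_idx, train_idx = order[:n_test], order[n_test:]
    # Same return order as scikit-learn: X_train, X_test, y_train, y_test
    return images[train_idx], images[test_idx], labels[train_idx], labels[test_idx]


# Placeholder data standing in for the loaded images: 10 RGB images of 224x224
# with binary labels (0 and 1 for the two classes).
X = np.zeros((10, 224, 224, 3), dtype=np.float32)
y = np.array([0, 1] * 5)

X_train, X_test, y_train, y_test = split_dataset(X, y)
print(X_train.shape, X_test.shape)
```

Images and labels are indexed with the same shuffled indices, so every image keeps its own label after the split.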

Data Visualization

The next step in the pipeline is to visualize and analyze the data to gain some intuition about what we are dealing with. For this we displayed the first 40 samples of each class from the dataset by running the following function:

Model Training

After creating the dataset, the next step is to pass the training data to our deep learning model so that it learns to classify the different classes of images. The model architecture used was:

Model: "functional_1"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| input_1 (InputLayer) | [(None, 224, 224, 3)] | 0 |
| block1_conv1 (Conv2D) | (None, 224, 224, 64) | 1792 |
| block1_conv2 (Conv2D) | (None, 224, 224, 64) | 36928 |
| block1_pool (MaxPooling2D) | (None, 112, 112, 64) | 0 |
| block2_conv1 (Conv2D) | (None, 112, 112, 128) | 73856 |
| block2_conv2 (Conv2D) | (None, 112, 112, 128) | 147584 |
| block2_pool (MaxPooling2D) | (None, 56, 56, 128) | 0 |
| block3_conv1 (Conv2D) | (None, 56, 56, 256) | 295168 |
| block3_conv2 (Conv2D) | (None, 56, 56, 256) | 590080 |
| block3_conv3 (Conv2D) | (None, 56, 56, 256) | 590080 |
| block3_pool (MaxPooling2D) | (None, 28, 28, 256) | 0 |
| block4_conv1 (Conv2D) | (None, 28, 28, 512) | 1180160 |
| block4_conv2 (Conv2D) | (None, 28, 28, 512) | 2359808 |
| block4_conv3 (Conv2D) | (None, 28, 28, 512) | 2359808 |
| block4_pool (MaxPooling2D) | (None, 14, 14, 512) | 0 |
| block5_conv1 (Conv2D) | (None, 14, 14, 512) | 2359808 |
| block5_conv2 (Conv2D) | (None, 14, 14, 512) | 2359808 |
| block5_conv3 (Conv2D) | (None, 14, 14, 512) | 2359808 |
| block5_pool (MaxPooling2D) | (None, 7, 7, 512) | 0 |
| average_pooling2d (AveragePo | (None, 3, 3, 512) | 0 |
| flatten (Flatten) | (None, 4608) | 0 |
| dense (Dense) | (None, 128) | 589952 |
| dropout (Dropout) | (None, 128) | 0 |
| dense_1 (Dense) | (None, 1) | 129 |

Total params: 15,304,769

Trainable params: 590,081

Non-trainable params: 14,714,688
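The parameter counts in the summary can be checked by hand. The short sketch below recomputes them from the layer shapes, assuming 3x3 convolution kernels with a bias term (the standard VGG-16 configuration). The helper names `conv2d_params` and `dense_params` are hypothetical and used only for this illustration.

```python
def conv2d_params(in_channels, out_channels, kernel=3):
    """Weights (kernel x kernel x in) plus one bias, per output channel."""
    return (kernel * kernel * in_channels + 1) * out_channels


def dense_params(in_units, out_units):
    """One weight per input plus one bias, per output unit."""
    return (in_units + 1) * out_units


# (in_channels, out_channels) for the 13 convolutional layers listed in the summary.
vgg_convs = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

frozen = sum(conv2d_params(i, o) for i, o in vgg_convs)        # VGG-16 base
head = dense_params(3 * 3 * 512, 128) + dense_params(128, 1)   # added dense layers

print(frozen)         # 14714688, the non-trainable params
print(head)           # 590081, the trainable params
print(frozen + head)  # 15304769, the total params
```

The trainable count equals the two dense layers alone, which confirms that the whole convolutional base is frozen and only the added head is trained.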

We used a pretrained VGG-16 model and applied transfer learning. We replaced the final dense layers of the VGG model with our own to suit the task. We do not train the entire VGG model, only the layers we added on top of it, which is why the summary reports 590,081 trainable parameters out of 15,304,769.
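The architecture described above could be built in Keras roughly as follows. This is a sketch reconstructed from the model summary, not the notebook's own code. The function name `build_model`, the pooling size of 2, the ReLU and sigmoid activations, and the dropout rate of 0.5 do not appear in the summary and are assumptions. The loss and optimizer follow the article.

```python
INPUT_SHAPE = (224, 224, 3)  # matches input_1 in the summary


def build_model(weights="imagenet", dropout_rate=0.5):
    """Frozen VGG-16 base with a small trainable classification head."""
    # Imported lazily so this file can be loaded without TensorFlow installed.
    import tensorflow as tf

    base = tf.keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=INPUT_SHAPE
    )
    base.trainable = False  # freeze all convolutional blocks

    # Head added on top of block5_pool (7x7x512).
    x = tf.keras.layers.AveragePooling2D(pool_size=(2, 2))(base.output)  # -> 3x3x512
    x = tf.keras.layers.Flatten()(x)                                     # -> 4608
    x = tf.keras.layers.Dense(128, activation="relu")(x)   # activation is assumed
    x = tf.keras.layers.Dropout(dropout_rate)(x)           # rate is assumed
    out = tf.keras.layers.Dense(1, activation="sigmoid")(x)  # single binary output

    model = tf.keras.Model(inputs=base.input, outputs=out)
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Calling `build_model().summary()` downloads the ImageNet weights on first use. If the assumptions above hold, it should report the same total and trainable parameter counts as the summary.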

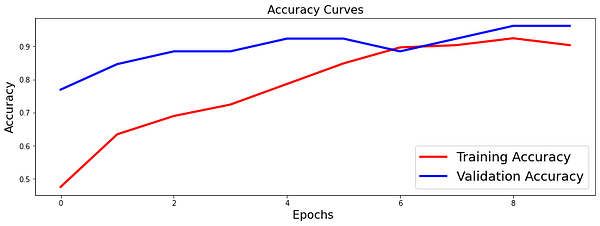

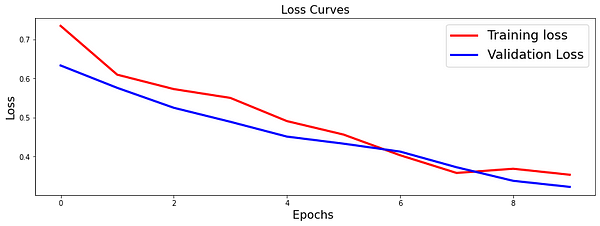

The loss function used was “binary_crossentropy” and the optimizer was “Adam”. For training the model we used the Keras API with TensorFlow as the backend. The model performed well, reaching a decent accuracy. Here are the training plots for the model:

Introduction to DeepC

The DeepC compiler and inference framework is designed to enable and run deep learning neural networks by focusing on the features of small form-factor devices such as microcontrollers, eFPGAs, CPUs, and other embedded devices like Raspberry Pi, ODROID, Arduino, SparkFun Edge, RISC-V, mobile phones, and x86 and ARM laptops, among others.

DeepC also offers an ahead-of-time compiler that produces optimized executables based on the LLVM compiler toolchain, specialized for deep neural networks, with ONNX as the front end.

Compilation with DeepC

After training, the model was saved in H5 format using Keras, since this format stores the weights and the model configuration in a single file.

After saving the file in H5 format, we can compile the model using the DeepC compiler, which comes as part of the cAInvas platform and converts the saved model into a format that can be deployed to edge devices. All of this can be done with a single command.

And that’s it: it is that easy to create a crop weed detection model that is also ready for deployment on edge devices.

Link for the cAInvas Notebook: https://cainvas.ai-tech.systems/use-cases/crop-weed-detection-app/

Credit: Ashish Arya
