Tag images with weather-related data using deep learning models.

Image tagging refers to labeling the different parts, components, and expressions in the image. This helps in selecting images based on content and is very useful in search engines and other similar applications.

As a manual process, image tagging is tedious, time-consuming, and monotonous. When images arrive continuously and in large numbers, manual tagging creates a backlog that is hard to clear.

Automated tagging has predefined keywords with which images are associated. Deep learning models are trained to classify images into these categories. In most cases, this is a multi-label classification problem.

Here, we tag images based on the weather of the scene. There are two classes, cloudy and sunny, and each image belongs to exactly one of them, so the task reduces to binary classification.

Implementation of the idea on cAInvas — here!

The dataset

Cewu Lu, Di Lin, Jiaya Jia, Chi-Keung Tang. “Two-class Weather Classification.” IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2014.

The dataset has two folders, train and test, each with two sub-folders, sunny and cloudy. The images are loaded using the image_dataset_from_directory() function of the keras.preprocessing module. The loaded images have shape (256, 256) with 3 channels (the function's default image size and color mode).
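A minimal sketch of the loading step, assuming TensorFlow 2.x is installed. The helper name load_split and the batch size are illustrative choices, not taken from the notebook. Recent TensorFlow releases expose the loader as tf.keras.utils.image_dataset_from_directory, which is what this sketch calls.

```python
import tensorflow as tf


def load_split(directory, image_size=(256, 256), batch_size=32, shuffle=True):
    """Load one split laid out as <directory>/<class_name>/<image files>.

    Class names are inferred from the sub-folder names in alphabetical
    order, so with cloudy/ and sunny/ the labels are cloudy=0, sunny=1.
    """
    return tf.keras.utils.image_dataset_from_directory(
        directory,
        labels="inferred",
        label_mode="binary",    # one float label per image, suited to a sigmoid output
        image_size=image_size,  # images are resized to this size on load
        batch_size=batch_size,
        shuffle=shuffle,
    )


# Hypothetical usage (the paths are placeholders, not the notebook's paths):
# train_ds = load_split("path/to/train")
# test_ds = load_split("path/to/test", shuffle=False)
```

Keeping shuffle=False for the test split makes it easier to line predictions up with the true labels later.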

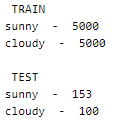

A peek into the spread of labels across the categories —

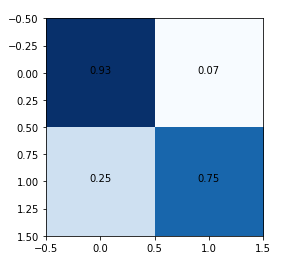

The train set is balanced while the test set is imbalanced, so the overall accuracy on the test set can be misleading. A confusion matrix helps by showing how well the model does on each class separately.
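To see why imbalance matters, here is a small pure-Python sketch. The labels are made up for illustration (they are not the dataset's real counts), and the encoding 0 = cloudy, 1 = sunny is an assumption.

```python
def confusion_matrix(y_true, y_pred, num_classes=2):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def per_class_recall(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Illustrative, made-up labels: 8 cloudy (0) and 2 sunny (1) images,
# scored against a degenerate model that always predicts cloudy.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 10

matrix = confusion_matrix(y_true, y_pred)
overall = sum(matrix[i][i] for i in range(2)) / len(y_true)

print(matrix)                    # [[8, 0], [2, 0]]
print(overall)                   # 0.8
print(per_class_recall(matrix))  # [1.0, 0.0]
```

The overall accuracy of 0.8 looks acceptable, yet no sunny image is ever tagged correctly. The per-class view exposes this.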

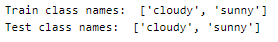

A peek into the class labels —

Visualization

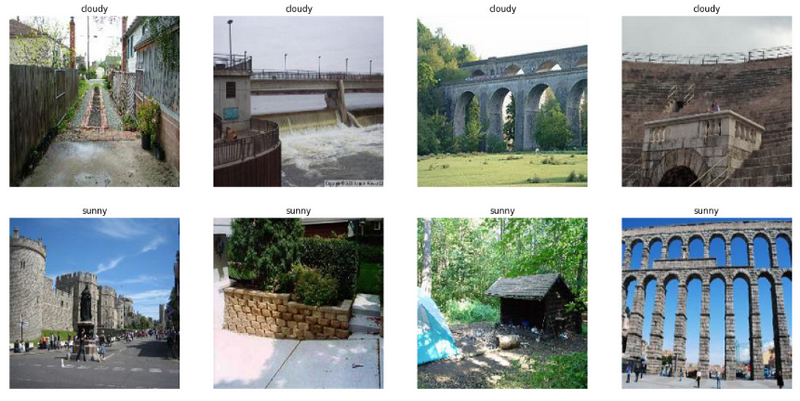

Let us look into a few images of the dataset —

Feel free to load more images and see the various images in the dataset.

It is important to note that the two classes are not always visually distinct. In some cases, even humans may find it difficult to categorize an image with high confidence.

Preprocessing

Normalization

The pixel values are currently in the range 0–255. They are normalized to float values in the range [0, 1], which helps the model converge faster. The Rescaling layer of the keras.layers.experimental.preprocessing module is used.
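The arithmetic behind this step is a single division. The sketch below uses NumPy to show it in isolation; the function name rescale is illustrative. A Keras Rescaling layer configured with a scale of 1/255 multiplies each pixel by that factor, which has the same effect.

```python
import numpy as np


def rescale(images):
    """Map pixel values from the range 0-255 to floats in [0, 1]."""
    return np.asarray(images, dtype="float32") / 255.0


# A tiny stand-in for an image batch: three pixel values.
pixels = np.array([0, 128, 255], dtype="uint8")
scaled = rescale(pixels)
print(scaled.dtype, scaled.min(), scaled.max())
```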

The model

Transfer learning is the concept of using knowledge gained by a model on a different dataset to solve the problem at hand. The model structure, with or without pre-trained weights, can be used for the current training process.

There are two options when training further on the current dataset: keep the pre-trained weights frozen and train only the newly added classification layer, or train the entire network.

Here, the DenseNet model is used after removing its last layer (the classification layer) and attaching our own as needed for the current problem. The pre-trained weights are kept frozen, and only the layers appended at the end are trained.
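A minimal sketch of this setup, with several assumptions: the article does not say which DenseNet variant or what head the notebook uses, so DenseNet121, global average pooling, and a single sigmoid unit are illustrative choices. The helper name build_model is hypothetical.

```python
import tensorflow as tf


def build_model(input_shape=(256, 256, 3), weights="imagenet"):
    """Frozen DenseNet base plus a small trainable binary head (assumed design)."""
    base = tf.keras.applications.DenseNet121(
        include_top=False,   # drop the original ImageNet classification layer
        weights=weights,     # "imagenet" downloads pre-trained weights; None skips it
        input_shape=input_shape,
        pooling="avg",       # global average pooling yields one feature vector per image
    )
    base.trainable = False   # keep the pre-trained weights fixed

    inputs = tf.keras.Input(shape=input_shape)
    features = base(inputs, training=False)  # run batch-norm layers in inference mode
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(features)
    return tf.keras.Model(inputs, outputs)
```

With the base frozen, the only trainable parameters are the kernel and bias of the final Dense layer, which keeps training fast on a small dataset.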

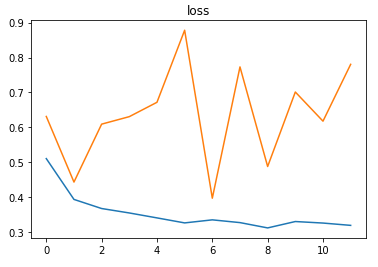

The model uses the binary cross-entropy loss, as this is a two-class classification problem. The Adam optimizer is used, and accuracy is tracked as the metric to review performance.
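For intuition about the loss, here is a plain-Python version of binary cross-entropy. It is a sketch of the standard formula, not the Keras implementation, and the clipping constant eps is an illustrative choice.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Confident and correct predictions give a small loss (roughly 0.105) ...
print(binary_cross_entropy([1, 0], [0.9, 0.1]))
# ... while confident and wrong predictions are penalized heavily (roughly 2.303).
print(binary_cross_entropy([1, 0], [0.1, 0.9]))
```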

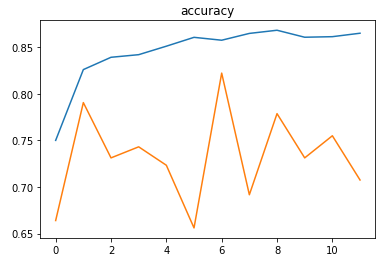

The EarlyStopping callback of the keras.callbacks module is used to monitor val_loss. It stops training if the validation loss does not improve for 5 consecutive epochs (the patience parameter).

The restore_best_weights parameter is set to True so that, at the end of training, the model is loaded with the weights from the epoch with the lowest val_loss.
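The patience logic can be illustrated with a simplified pure-Python re-implementation. This is a sketch, not the Keras code: it ignores options such as min_delta, and the validation losses below are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve on the best value
    seen so far for `patience` consecutive epochs. With weight restoring,
    the weights from `best_epoch` would be the ones kept.
    """
    best, best_epoch, wait = float("inf"), None, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Made-up losses: the best value (0.45) occurs at epoch 2, then five
# epochs pass without improvement, so training stops at epoch 7.
losses = [0.60, 0.50, 0.45, 0.47, 0.46, 0.48, 0.46, 0.49, 0.40]
print(early_stopping_epoch(losses))  # (7, 2)
```

Note that the final value 0.40 is never reached: early stopping trades the chance of a late improvement for a shorter training run.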

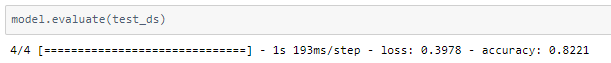

The model is trained with a learning rate of 0.01 and achieves ~82% accuracy on the test set.

The confusion matrix is as follows —

The metrics

Prediction

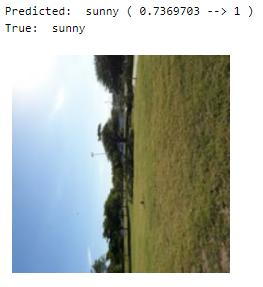

Let us look at a random image from the test set along with the model’s prediction for the same —
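Turning a model output into a tag can be sketched as below. This assumes a single sigmoid output and the alphabetical label encoding cloudy = 0, sunny = 1. The function name and the 0.5 threshold are illustrative.

```python
CLASS_NAMES = ["cloudy", "sunny"]  # assumed order: index 0 = cloudy, 1 = sunny


def tag_from_probability(prob, threshold=0.5):
    """Convert a sigmoid output into a (tag, confidence) pair."""
    index = int(prob >= threshold)
    # The sigmoid output is the probability of class 1 (sunny);
    # the probability of cloudy is its complement.
    confidence = prob if index == 1 else 1 - prob
    return CLASS_NAMES[index], confidence


print(tag_from_probability(0.9))  # ('sunny', 0.9)
```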

deepC

The deepC library, compiler, and inference framework is designed to run deep learning neural networks on small form-factor devices such as microcontrollers, eFPGAs, and CPUs, as well as other embedded devices like Raspberry Pi, Odroid, Arduino, SparkFun Edge, RISC-V, mobile phones, and x86 and ARM laptops, among others.

Compiling the model using deepC —

Head over to the cAInvas platform (link to notebook given earlier) to run and generate your own .exe file!

Credits: Ayisha D
