Autoencoders can be used to increase image resolution, which has proved effective when we want to extract information from surveillance camera feeds. They can also be used for noise reduction, and they can serve as part of various IIoT applications.

Table of Contents

- Introduction to cAInvas

- Importing the Dataset

- Data Loader

- Model Training

- Introduction to DeepC

- Compilation with DeepC

Introduction to cAInvas

cAInvas is an integrated development platform for creating intelligent edge devices. Not only can we train our deep learning model using TensorFlow, Keras, or PyTorch, we can also compile it with the platform's edge compiler, DeepC, to deploy the working model on edge devices for production.

The Image Resolution Improvement model is also part of the cAInvas gallery. All the dependencies you will need for this project come pre-installed.

cAInvas also offers various other deep learning notebooks in its gallery, which one can use for reference or to gain insight into deep learning. It also has GPU support, which makes it the best of its kind.

Importing the Dataset

One of the key features of cAInvas is its UseCases Gallery. When you work on any of its use cases, you don’t have to look for data manually, because the platform can import the dataset into your workspace. To load the data, we just have to enter the following commands:

Running the above commands will load the data into your workspace, where you will use it for model training.

Data Loader

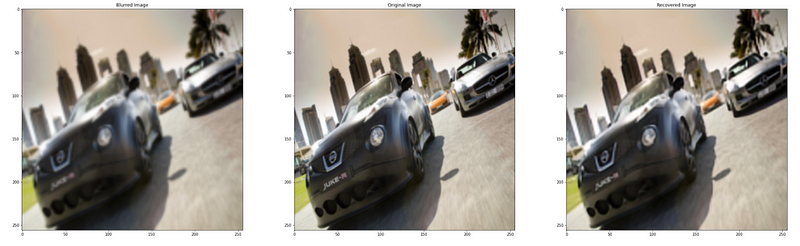

To load the data, we read all the image files in the dataset folder and load them in batches. For training, we pass low resolution images to the model and train it to produce high resolution images. To build these pairs, we load the high resolution images, blur them, and use the blurred images as the training input.
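The pairing described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the article does not say which blur was used, so a simple box (mean) filter stands in for it, and the names `box_blur` and `batch_pairs` are hypothetical helpers, not part of the cAInvas notebook. Images are assumed to be already decoded into a float array of shape `(N, H, W, C)` with values in `[0, 1]`.

```python
import numpy as np


def box_blur(images, k=5):
    """Blur a batch of images (N, H, W, C) with a k x k mean filter.

    Assumption: a box filter stands in for whatever blur the notebook uses.
    Use an odd k so the filter window is centred on each pixel.
    """
    pad = k // 2
    # Repeat edge pixels so the output keeps the input height and width.
    padded = np.pad(images, ((0, 0), (pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = images.shape[1:3]
    out = np.zeros(images.shape, dtype=np.float32)
    # Sum the k * k shifted copies of the image, then divide to get the mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[:, dy:dy + h, dx:dx + w, :]
    return out / (k * k)


def batch_pairs(high_res, batch_size=8, k=5):
    """Yield (blurred_input, sharp_target) batches for training."""
    for start in range(0, len(high_res), batch_size):
        target = high_res[start:start + batch_size].astype(np.float32)
        yield box_blur(target, k), target


if __name__ == "__main__":
    # Random stand-in data; real code would load the dataset images instead.
    demo = np.random.default_rng(0).random((10, 32, 32, 3)).astype(np.float32)
    for x, y in batch_pairs(demo, batch_size=4, k=3):
        print(x.shape, y.shape)
```

Each yielded pair keeps the blurred image and its sharp original aligned, so the model always sees the degraded version as input and the untouched version as the target.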

Model Training

After creating the train set and test set, the next step is to pass the training data to our deep learning model so that it learns to produce high resolution images from low resolution ones. The model architecture used was:

Model: "functional_1"

| Layer (type) | Output Shape | Param # | Connected to |
| --- | --- | --- | --- |
| input_1 (InputLayer) | [(None, 256, 256, 3)] | 0 | |
| conv2d (Conv2D) | (None, 256, 256, 64) | 1792 | input_1[0][0] |
| conv2d_1 (Conv2D) | (None, 256, 256, 64) | 36928 | conv2d[0][0] |
| max_pooling2d (MaxPooling2D) | (None, 128, 128, 64) | 0 | conv2d_1[0][0] |
| dropout (Dropout) | (None, 128, 128, 64) | 0 | max_pooling2d[0][0] |
| conv2d_2 (Conv2D) | (None, 128, 128, 128) | 73856 | dropout[0][0] |
| conv2d_3 (Conv2D) | (None, 128, 128, 128) | 147584 | conv2d_2[0][0] |
| max_pooling2d_1 (MaxPooling2D) | (None, 64, 64, 128) | 0 | conv2d_3[0][0] |
| conv2d_4 (Conv2D) | (None, 64, 64, 256) | 295168 | max_pooling2d_1[0][0] |
| up_sampling2d (UpSampling2D) | (None, 128, 128, 256) | 0 | conv2d_4[0][0] |
| conv2d_5 (Conv2D) | (None, 128, 128, 128) | 295040 | up_sampling2d[0][0] |
| conv2d_6 (Conv2D) | (None, 128, 128, 128) | 147584 | conv2d_5[0][0] |
| add (Add) | (None, 128, 128, 128) | 0 | conv2d_3[0][0], conv2d_6[0][0] |
| up_sampling2d_1 (UpSampling2D) | (None, 256, 256, 128) | 0 | add[0][0] |
| conv2d_7 (Conv2D) | (None, 256, 256, 64) | 73792 | up_sampling2d_1[0][0] |
| conv2d_8 (Conv2D) | (None, 256, 256, 64) | 36928 | conv2d_7[0][0] |
| add_1 (Add) | (None, 256, 256, 64) | 0 | conv2d_8[0][0], conv2d_1[0][0] |
| conv2d_9 (Conv2D) | (None, 256, 256, 3) | 1731 | add_1[0][0] |

Total params: 1,110,403

Trainable params: 1,110,403

Non-trainable params: 0
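For readers who want to rebuild this network, the summary can be turned back into Keras functional-API code roughly as follows. The layer order, filter counts and skip connections come straight from the summary; the 3x3 kernels and `"same"` padding are implied by its parameter counts and output shapes. The ReLU activations and the 0.3 dropout rate are assumptions, since the summary does not record them, and `build_model` is a hypothetical name. The model is compiled with the MSE loss and Adam optimizer this article uses.

```python
from tensorflow.keras import Model, layers


def build_model(input_shape=(256, 256, 3)):
    """Rebuild the autoencoder from the summary (activations and dropout rate assumed)."""

    def conv(filters):
        # 3x3 kernels with "same" padding reproduce the summary's parameter counts.
        return layers.Conv2D(filters, 3, padding="same", activation="relu")

    inputs = layers.Input(shape=input_shape)

    # Encoder: two conv blocks, each followed by 2x downsampling.
    c0 = conv(64)(inputs)
    c1 = conv(64)(c0)
    p1 = layers.MaxPooling2D()(c1)
    d1 = layers.Dropout(0.3)(p1)  # rate is an assumption
    c2 = conv(128)(d1)
    c3 = conv(128)(c2)
    p2 = layers.MaxPooling2D()(c3)
    c4 = conv(256)(p2)

    # Decoder: upsample and add the matching encoder features (skip connections).
    u1 = layers.UpSampling2D()(c4)
    c5 = conv(128)(u1)
    c6 = conv(128)(c5)
    a1 = layers.Add()([c3, c6])
    u2 = layers.UpSampling2D()(a1)
    c7 = conv(64)(u2)
    c8 = conv(64)(c7)
    a2 = layers.Add()([c8, c1])
    outputs = conv(3)(a2)  # three output channels, same size as the input

    model = Model(inputs, outputs)
    model.compile(optimizer="adam", loss="mse")
    return model


if __name__ == "__main__":
    build_model().summary()
```

Calling `build_model().count_params()` should give the same total as the summary, which is a quick way to check that a reconstruction like this matches the original layer sizes.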

The loss function used was “MSE” (mean squared error) and the optimizer used was “Adam”. For training the model, we used the Keras API with TensorFlow as the backend. Here are some test results:

Introduction to DeepC

The DeepC compiler and inference framework is designed to enable and perform deep learning neural networks by focusing on the features of small form-factor devices such as microcontrollers, eFPGAs, CPUs and other embedded devices like Raspberry Pi, ODROID, Arduino, SparkFun Edge, RISC-V, mobile phones, and x86 and ARM laptops, among others.

DeepC also offers an ahead-of-time compiler that produces optimized executables based on the LLVM compiler toolchain, specialized for deep neural networks, with ONNX as the front end.

Compilation with DeepC

After training, the model was saved in H5 format using Keras, which stores the weights and the model configuration in a single file.

After saving the file in H5 format, we can compile the model using the DeepC compiler, which comes as part of the cAInvas platform and converts the saved model into a format that can be easily deployed to edge devices. All of this can be done with a single command.

And that’s it, our Image Resolution Improvement Model is trained and ready for deployment on edge devices.

Link for the cAInvas Notebook: https://cainvas.ai-tech.systems/use-cases/improving-image-resolution-with-autoencoders-app/

Credit: Ashish Arya
