Building a Deep Learning Model for Intent Classification

What is intent?

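Intent is the aim behind a specific action or set of actions. In intent classification, a model reads a user's utterance and assigns it to one of a fixed set of goals. In this article there are four: BookRestaurant, GetWeather, PlayMusic and RateBook.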

Aim

To develop a deep learning model for intent classification using the Python programming language and Keras on the Cainvas platform.

Prerequisites

Before getting started, you should have a good understanding of:

- Python programming language

- Keras, the deep learning library

Dataset

The CSV file we are going to use can be downloaded directly from this link: https://cainvas-static.s3.amazonaws.com/media/user_data/vomchaithany/train.csv

Get the data file

output:

--2021-07-11 14:09:45--  https://cainvas-static.s3.amazonaws.com/media/user_data/vomchaithany/train.csv
Resolving cainvas-static.s3.amazonaws.com (cainvas-static.s3.amazonaws.com)... 52.219.66.48
Connecting to cainvas-static.s3.amazonaws.com (cainvas-static.s3.amazonaws.com)|52.219.66.48|:443... connected.
HTTP request sent, awaiting response... 304 Not Modified
File ‘train.csv’ not modified on server. Omitting download.
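The download command itself is not shown; the log above comes from wget. For readers working outside Cainvas, here is a minimal standard-library sketch. It keeps an existing `train.csv` instead of downloading it again. This is a simpler rule than the server-side "304 Not Modified" check seen in the log. The helper name `fetch_dataset` is hypothetical.

```python
import os
import urllib.request

# URL taken from the article; the local filename matches what wget saved.
DATA_URL = "https://cainvas-static.s3.amazonaws.com/media/user_data/vomchaithany/train.csv"


def fetch_dataset(url: str = DATA_URL, path: str = "train.csv") -> str:
    """Download `url` to `path` unless a local copy already exists (hypothetical helper)."""
    if os.path.exists(path):
        # Keep the existing file; unlike wget, no freshness check is made against the server.
        return path
    urllib.request.urlretrieve(url, path)
    return path


if __name__ == "__main__":
    print(fetch_dataset())
```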

Import required libraries

Load the data

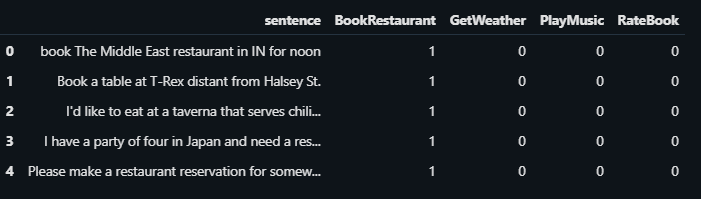

output:

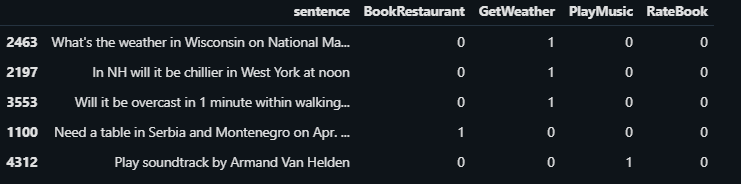
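The loading code appears only as a screenshot. As a dependency-free alternative, the file can be read with Python's `csv` module. The column names `text` and `intent` are hypothetical placeholders, because the real headers are visible only in the image. Pass the actual names when calling the (also hypothetical) `load_intents` helper.

```python
import csv
from collections import Counter


def load_intents(path, text_col="text", label_col="intent"):
    """Read (utterance, intent) pairs from a CSV file with a header row.

    `text_col` and `label_col` are placeholder column names, not the dataset's confirmed headers.
    """
    with open(path, newline="", encoding="utf-8") as f:
        rows = list(csv.DictReader(f))  # handles quoted fields containing commas
    texts = [row[text_col] for row in rows]
    labels = [row[label_col] for row in rows]
    return texts, labels


if __name__ == "__main__":
    texts, labels = load_intents("train.csv")
    # Number of utterances and how many belong to each intent
    print(len(texts), Counter(labels))
```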

Let’s shuffle the data

output:

((7929,), (7929, 4))

output:

('In NH will it be chillier in West York at noon', array([0, 1, 0, 0]))
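The outputs above show 7,929 utterances and a 4-column label matrix, so the labels were one-hot encoded. Below is a minimal NumPy sketch that shuffles utterances and labels together and then one-hot encodes the labels. The alphabetical class order is an assumption about how the 4-column matrix was built; it is the order `pandas.get_dummies` would produce. `shuffle_and_one_hot` is a hypothetical helper.

```python
import numpy as np


def shuffle_and_one_hot(texts, labels, seed=0):
    """Shuffle utterances and labels with one shared permutation, then one-hot encode the labels.

    Classes are sorted alphabetically, which is an assumed (not confirmed) column order.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(texts))
    x = np.asarray(texts, dtype=object)[order]
    shuffled_labels = [labels[i] for i in order]  # same permutation keeps pairs aligned

    classes = sorted(set(labels))
    col = {name: j for j, name in enumerate(classes)}
    y = np.zeros((len(labels), len(classes)), dtype=np.int64)
    y[np.arange(len(labels)), [col[name] for name in shuffled_labels]] = 1
    return x, y, classes
```

With this ordering, GetWeather lands in column 1. That agrees with the label `[0, 1, 0, 0]` printed above for the weather question.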

Preprocessing
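The preprocessing code is not reproduced in the article. The outputs that follow show 5,729 training and 2,200 test sequences, each padded to 4,000 integer ids with leading zeros. The pure-Python sketch below illustrates the idea:

- it ranks words by frequency, with ids starting at 1 so that 0 stays free for padding;
- it left-pads and left-truncates each sequence, mirroring the defaults of Keras's `Tokenizer` and `pad_sequences` (`padding='pre'`, `truncating='pre'`).

It is a simplified stand-in, not the notebook's code. The tail-slice split in the hypothetical `split_train_test` is just one way to get disjoint training and test sets.

```python
import re
from collections import Counter

# Characters replaced by spaces before splitting, similar to Keras Tokenizer's default filters.
_PUNCT = re.compile(r'[!"#$%&()*+,\-./:;<=>?@\[\\\]^_`{|}~\t\n]')


def _words(text):
    return _PUNCT.sub(" ", text.lower()).split()


def build_vocab(texts):
    """Map each word to an id: most frequent word first, ids start at 1 (0 = padding)."""
    counts = Counter()
    for t in texts:
        counts.update(_words(t))
    # Counter keeps first-seen order and sorted() is stable, so ties keep that order.
    ranked = sorted(counts, key=lambda w: -counts[w])
    return {w: i for i, w in enumerate(ranked, start=1)}


def texts_to_padded(texts, vocab, maxlen):
    """Encode texts as id lists, then truncate and pad them on the left to `maxlen`."""
    rows = []
    for t in texts:
        ids = [vocab[w] for w in _words(t) if w in vocab]  # unknown words are dropped
        ids = ids[-maxlen:]  # 'pre' truncation keeps the last tokens
        rows.append([0] * (maxlen - len(ids)) + ids)  # 'pre' padding adds leading zeros
    return rows


def split_train_test(rows, test_size):
    """Hold out the last `test_size` rows; assumes the data is already shuffled."""
    return rows[:-test_size], rows[-test_size:]
```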

output:

((7929,), (7929, 4))

output:

((5729, 4000), (2200, 4000))

output:

(array([[   0,    0,    0, ...,    5,   11,   32],
        [   0,    0,    0, ...,  350,  977,  614],
        [   0,    0,    0, ...,    9, 4070, 2134],
        ...,
        [   0,    0,    0, ...,    4, 5446,  493],
        [   0,    0,    0, ...,  503,  562,  281],
        [   0,    0,    0, ...,   29,    4, 5447]], dtype=int32),
 array([[   0,    0,    0, ...,    5,   11,   32],
        [   0,    0,    0, ...,  350,  977,  614],
        [   0,    0,    0, ...,    9, 4070, 2134],
        ...,
        [   0,    0,    0, ...,   97,  195,  265],
        [   0,    0,    0, ...,   72,    5,   95],
        [   0,    0,    0, ..., 2294,   19,   10]], dtype=int32))

Build and Train the Model
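The model code is not shown, but the summary that follows pins down the architecture. Here is a minimal sketch that reproduces it with Keras on TensorFlow.

- The vocabulary size of 7,522 is inferred from the embedding's 240,704 parameters (240,704 / 32).
- The dropout rate, the ReLU and softmax activations, the Adam optimizer and the categorical cross-entropy loss are assumptions. None of them changes the parameter counts.

```python
from tensorflow import keras

VOCAB_SIZE = 7522   # inferred: 240,704 embedding params / 32 dimensions
MAXLEN = 4000       # padded sequence length from the preprocessing output
NUM_CLASSES = 4     # BookRestaurant, GetWeather, PlayMusic, RateBook


def build_model(dropout=0.2):
    """Rebuild the summarized architecture; dropout rate, activations and optimizer are assumed."""
    model = keras.Sequential([
        keras.Input(shape=(MAXLEN,)),
        keras.layers.Embedding(VOCAB_SIZE, 32),  # (None, 4000, 32)
        keras.layers.LSTM(10),                   # (None, 10)
        keras.layers.Dropout(dropout),
        keras.layers.Dense(800, activation="relu"),
        keras.layers.Dropout(dropout),
        keras.layers.Dense(200, activation="relu"),
        keras.layers.Dropout(dropout),
        keras.layers.Dense(NUM_CLASSES, activation="softmax"),  # one probability per intent
    ])
    # One-hot labels pair naturally with categorical cross-entropy.
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

Training could then be run with something like `model.fit(X_train, y_train, epochs=5, validation_data=(X_test, y_test))`, where the array names are hypothetical.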

output:

Model: "sequential_9"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_9 (Embedding)      (None, 4000, 32)          240704
_________________________________________________________________
lstm_9 (LSTM)                (None, 10)                1720
_________________________________________________________________
dropout_27 (Dropout)         (None, 10)                0
_________________________________________________________________
dense_27 (Dense)             (None, 800)               8800
_________________________________________________________________
dropout_28 (Dropout)         (None, 800)               0
_________________________________________________________________
dense_28 (Dense)             (None, 200)               160200
_________________________________________________________________
dropout_29 (Dropout)         (None, 200)               0
_________________________________________________________________
dense_29 (Dense)             (None, 4)                 804
=================================================================
Total params: 412,228
Trainable params: 412,228
Non-trainable params: 0
_________________________________________________________________
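Each Param # entry above can be checked by hand with the standard formulas:

- an embedding has vocab × dim weights;
- an LSTM has four gates, each with an input kernel, a recurrent kernel and a bias;
- a dense layer has inputs × units weights plus one bias per unit;
- dropout layers have none.

The vocabulary size 7,522 is derived from the embedding row, not stated in the article. It presumably equals the tokenizer's vocabulary plus one id reserved for padding.

```python
def embedding_params(vocab_size, dim):
    # One dim-sized vector per vocabulary id
    return vocab_size * dim


def lstm_params(input_dim, units):
    # 4 gates, each with an input kernel, a recurrent kernel and a bias vector
    return 4 * (units * (input_dim + units) + units)


def dense_params(inputs, units):
    # Weight matrix plus one bias per unit
    return inputs * units + units


LAYER_PARAMS = {
    "embedding (7522 ids x 32)": embedding_params(7522, 32),
    "lstm (32 -> 10)": lstm_params(32, 10),
    "dense (10 -> 800)": dense_params(10, 800),
    "dense (800 -> 200)": dense_params(800, 200),
    "dense (200 -> 4)": dense_params(200, 4),
}
TOTAL = sum(LAYER_PARAMS.values())

if __name__ == "__main__":
    for name, count in LAYER_PARAMS.items():
        print(f"{name:<26}{count:>9,}")
    print(f"{'total':<26}{TOTAL:>9,}")  # 412,228, matching the summary
```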

output:

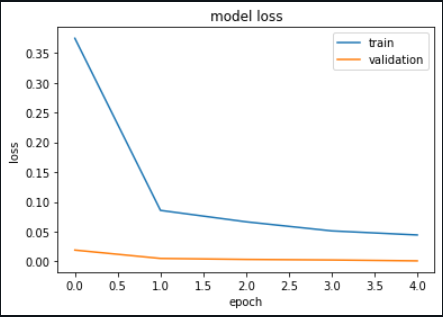

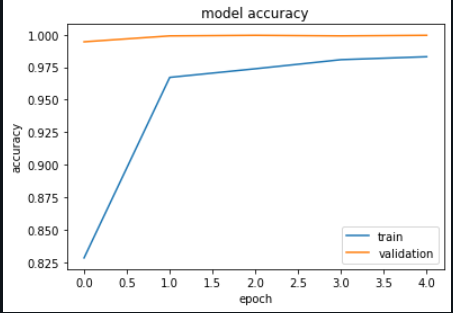

Epoch 1/5
180/180 [==============================] - 19s 106ms/step - loss: 0.3748 - accuracy: 0.8284 - val_loss: 0.0190 - val_accuracy: 0.9945
Epoch 2/5
180/180 [==============================] - 19s 103ms/step - loss: 0.0857 - accuracy: 0.9672 - val_loss: 0.0049 - val_accuracy: 0.9991
Epoch 3/5
180/180 [==============================] - 19s 103ms/step - loss: 0.0665 - accuracy: 0.9738 - val_loss: 0.0033 - val_accuracy: 0.9995
Epoch 4/5
180/180 [==============================] - 19s 103ms/step - loss: 0.0512 - accuracy: 0.9808 - val_loss: 0.0024 - val_accuracy: 0.9991
Epoch 5/5
180/180 [==============================] - 19s 103ms/step - loss: 0.0444 - accuracy: 0.9831 - val_loss: 8.6375e-04 - val_accuracy: 0.9995
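The 180/180 counter is the number of batches per epoch. The notebook's actual batch size is not shown. Assuming Keras's default `batch_size=32`, the count follows from the 5,729 training samples, and the same rule gives the 69 steps in the evaluation output for the 2,200 test samples.

```python
import math


def steps_per_epoch(n_samples, batch_size=32):
    # The last, partial batch still counts as one step
    return math.ceil(n_samples / batch_size)


if __name__ == "__main__":
    print(steps_per_epoch(5729))  # training set: 179.03 batches -> 180
    print(steps_per_epoch(2200))  # test set: 68.75 batches -> 69
```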

Save the model

Plots
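The plotting code is not included. Below is a minimal matplotlib sketch. It assumes the history is a dict shaped like Keras's `History.history`, with keys `loss`, `val_loss`, `accuracy` and `val_accuracy`. The sample values are copied from the training log, and the output filename is hypothetical.

```python
import matplotlib
matplotlib.use("Agg")  # render to files without a display
import matplotlib.pyplot as plt


def plot_history(history):
    """Plot training vs. validation loss and accuracy from a Keras-style history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))

    ax_loss.plot(epochs, history["loss"], label="loss")
    ax_loss.plot(epochs, history["val_loss"], label="val_loss")
    ax_loss.set_title("Loss vs Validation Loss")
    ax_loss.set_xlabel("epoch")
    ax_loss.legend()

    ax_acc.plot(epochs, history["accuracy"], label="accuracy")
    ax_acc.plot(epochs, history["val_accuracy"], label="val_accuracy")
    ax_acc.set_title("Accuracy vs Validation Accuracy")
    ax_acc.set_xlabel("epoch")
    ax_acc.legend()

    fig.tight_layout()
    return fig


# Per-epoch values copied from the training log above.
LOGGED = {
    "loss": [0.3748, 0.0857, 0.0665, 0.0512, 0.0444],
    "val_loss": [0.0190, 0.0049, 0.0033, 0.0024, 0.00086375],
    "accuracy": [0.8284, 0.9672, 0.9738, 0.9808, 0.9831],
    "val_accuracy": [0.9945, 0.9991, 0.9995, 0.9991, 0.9995],
}

if __name__ == "__main__":
    plot_history(LOGGED).savefig("training_curves.png")
```

In the notebook itself, `history.history` from `model.fit` would take the place of `LOGGED`.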

Loss vs Validation Loss

Accuracy vs Validation Accuracy

Loss and Accuracy

output:

69/69 [==============================] - 3s 41ms/step - loss: 8.6375e-04 - accuracy: 0.9995
[0.0008637462160550058, 0.9995454549789429]
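The evaluation loss equals the final `val_loss`, so the evaluated set appears to be the same 2,200-sample test set used for validation. The reported accuracy can be turned into a count of mistakes. Assuming all 2,200 test samples were evaluated, the value works out to exactly one misclassified utterance: 2,199 / 2,200 matches it to float32 precision.

```python
TEST_SAMPLES = 2200                     # rows of the padded test array
REPORTED_ACCURACY = 0.9995454549789429  # second value returned by evaluate


def implied_errors(accuracy, n):
    # accuracy = correct / n, so the number of errors is (1 - accuracy) * n
    return round((1 - accuracy) * n)


if __name__ == "__main__":
    errors = implied_errors(REPORTED_ACCURACY, TEST_SAMPLES)
    print(errors, (TEST_SAMPLES - errors) / TEST_SAMPLES)
```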

Custom Predictions

output:

'BookRestaurant'

output:

'GetWeather'

output:

'RateBook'

output:

'PlayMusic'
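The prediction code is not shown. The usual pattern is to encode the sentence with the training tokenizer and padding length, call `predict`, and take the argmax over the four classes. In this sketch the class order in `CLASSES` is an assumption: it is alphabetical, which is consistent with the `[0, 1, 0, 0]` label of the weather question. `predict_intent` and its `encode` argument are hypothetical.

```python
import numpy as np

# Assumed column order of the one-hot labels (alphabetical, as pandas.get_dummies would give).
CLASSES = ["BookRestaurant", "GetWeather", "PlayMusic", "RateBook"]


def predict_intent(text, encode, model, classes=CLASSES):
    """Return the intent name with the highest predicted probability.

    `encode` maps a list of strings to a padded (n, maxlen) id array using the training
    tokenizer and maxlen; `model` is any object with a Keras-style `predict` method.
    """
    x = np.asarray(encode([text]))
    probs = model.predict(x, verbose=0)  # shape (1, len(classes))
    return classes[int(np.argmax(probs[0]))]
```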

Cainvas Gallery: https://cainvas.ai-tech.systems/gallery/

Conclusion

We trained an LSTM model on the intent classification dataset. On the 2,200 held-out samples it reached a loss of 8.6e-04 and an accuracy of about 99.95%.

Notebook Link: Here

Credit: Om Chaithanya V



Become a Contributor: Write for AITS Publication Today! We’ll be happy to publish your latest article on data science, artificial intelligence, machine learning, deep learning, and other technology topics