Agenda!

Among deep learning methods, Long Short-Term Memory (LSTM) networks are especially appealing in the predictive maintenance domain because they are good at learning from sequences. In this article, we build an LSTM network to predict the remaining useful life of aircraft engines. The network uses simulated aircraft sensor values to predict when an aircraft engine will fail, so that maintenance can be planned in advance.

The first line of code!

https://gist.github.com/938d0b753968e51215cea8d627242c15

Data Summary

The Dataset directory contains the training, test, and ground truth datasets. The training data consists of multiple multivariate time series with “cycle” as the time unit, together with 21 sensor readings for each cycle.

Each time series can be assumed to come from a different engine of the same type. The test data has the same schema as the training data; the only difference is that it does not indicate when the failure occurs. Finally, the ground truth data provides the number of remaining working cycles for each engine in the test data.
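To make the schema concrete, here is a minimal loading sketch. It assumes each file is whitespace-separated with no header row and follows the usual NASA C-MAPSS column order (engine id, cycle, three operational settings, then the 21 sensors). The column names and the `load_engine_data` helper are hypothetical, so adjust them to your copy of the data.

```python
import io

import pandas as pd

# Assumed C-MAPSS-style layout (not stated in the article): engine id, cycle,
# three operational settings, then the 21 sensor readings s1..s21.
COLUMNS = ["id", "cycle", "setting1", "setting2", "setting3"] + [f"s{i}" for i in range(1, 22)]


def load_engine_data(source) -> pd.DataFrame:
    """Read a whitespace-separated engine file (path or buffer) into a labeled DataFrame."""
    df = pd.read_csv(source, sep=r"\s+", header=None)
    # Trailing spaces can create all-empty columns; drop them before naming.
    df = df.dropna(axis=1, how="all")
    df.columns = COLUMNS
    # Keep each engine's time series in cycle order.
    return df.sort_values(["id", "cycle"]).reset_index(drop=True)


if __name__ == "__main__":
    # Tiny in-memory sample: engine 1 has two cycles (out of order), engine 2 has one.
    sample = "\n".join(
        " ".join([str(eid), str(cyc)] + ["0.5"] * 24) for eid, cyc in [(1, 2), (1, 1), (2, 1)]
    )
    print(load_engine_data(io.StringIO(sample))[["id", "cycle"]])
```

The same helper works for the training and test files, since they share a schema.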

>Training Dataset

https://gist.github.com/6723ae2c6323256693999865b88641cf

>Test Dataset

https://gist.github.com/2e441cdd3cca258382c4b1d4f8276514

>Ground Truth Dataset

https://gist.github.com/3b8dbf82f316b9f2ed9b29cc409ed838

Data Preprocessing

In the data preprocessing step, we generate three new columns for the training data: Remaining Useful Life (RUL), label1, and label2. The “label1” column is used for binary classification.
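A minimal pandas sketch of this step, assuming the training DataFrame has `id` and `cycle` columns and that each engine's last recorded cycle is its failure point. The thresholds `W1 = 30` and `W0 = 15` cycles are assumptions for illustration: `label1` marks rows within `W1` cycles of failure, and `label2` splits those further into a third class for rows within `W0` cycles.

```python
import pandas as pd

# Hypothetical thresholds, in cycles (not given in the article).
W1 = 30  # label1 = 1 if the engine fails within W1 cycles
W0 = 15  # label2 = 2 if the engine fails within W0 cycles


def add_rul_and_labels(train: pd.DataFrame, w1: int = W1, w0: int = W0) -> pd.DataFrame:
    df = train.copy()
    # In the training data, the last recorded cycle of each engine is its failure cycle.
    max_cycle = df.groupby("id")["cycle"].transform("max")
    df["RUL"] = max_cycle - df["cycle"]
    # Binary label: will the engine fail within w1 cycles?
    df["label1"] = (df["RUL"] <= w1).astype(int)
    # Three-class label: 0 = healthy, 1 = within w1 cycles, 2 = within w0 cycles.
    df["label2"] = df["label1"]
    df.loc[df["RUL"] <= w0, "label2"] = 2
    return df
```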

https://gist.github.com/08cc91984b9998709137d5d754496809

To prepare the test data, we first normalize it using the MinMax normalization parameters fitted on the training data.
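The key point is that the minimum and range come from the training data only, and the same numbers are reused on the test data. The numpy sketch below (hypothetical helpers `fit_minmax` and `apply_minmax`) makes that rule explicit; in practice, scikit-learn's `MinMaxScaler`, fit on the training columns and then used to `transform` the test columns, does the same job.

```python
import numpy as np


def fit_minmax(train: np.ndarray):
    """Return per-column (min, range) computed on the training data only."""
    col_min = train.min(axis=0)
    col_range = train.max(axis=0) - col_min
    # Constant columns would divide by zero; use a range of 1 so they scale to 0.
    col_range = np.where(col_range == 0, 1.0, col_range)
    return col_min, col_range


def apply_minmax(x: np.ndarray, col_min: np.ndarray, col_range: np.ndarray) -> np.ndarray:
    # Test values outside the training range land outside [0, 1]; that is expected.
    return (x - col_min) / col_range
```

Fitting on the test set instead would leak information about the test distribution into preprocessing.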

Next, we use the ground truth dataset to generate labels for the test data.
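One way to do this, sketched under two assumptions: the i-th ground truth value belongs to engine id i+1, and the engine fails that many cycles after its last observed test cycle. The RUL at any cycle is then `last_cycle + truth - cycle`. The helper name and the 30-cycle threshold for `label1` are hypothetical.

```python
import pandas as pd


def add_test_rul(test: pd.DataFrame, truth_rul, w1: int = 30) -> pd.DataFrame:
    df = test.copy()
    # Assumption: ground truth values are ordered by engine id, starting at 1.
    extra = pd.Series(list(truth_rul), index=range(1, len(truth_rul) + 1))
    last_cycle = df.groupby("id")["cycle"].transform("max")
    # Failure happens `extra` cycles after the last observed cycle.
    df["RUL"] = last_cycle + df["id"].map(extra) - df["cycle"]
    # Same binary rule as the training labels.
    df["label1"] = (df["RUL"] <= w1).astype(int)
    return df
```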

https://gist.github.com/f00c9128f9882282b692dae932bf79f6

Modeling

https://gist.github.com/2b9017538d39a2b65b4b2af1149029fa

LSTM layers expect input as a 3-dimensional NumPy array of shape (samples, time steps, features), where samples is the number of training sequences, time steps is the lookback window (sequence length), and features is the number of features in each sequence at each time step.
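A sliding-window sketch that produces this 3D array. It assumes rows are sorted by engine id and cycle, and that windows never cross engine boundaries; the function name `gen_sequences` is hypothetical. An engine with n cycles yields n - seq_length + 1 windows, and engines shorter than the window yield none.

```python
import numpy as np


def gen_sequences(features: np.ndarray, engine_ids: np.ndarray, seq_length: int) -> np.ndarray:
    """Slide a window of `seq_length` cycles over each engine's rows.

    features:   (n_rows, n_features), rows sorted by engine id, then cycle.
    engine_ids: (n_rows,) engine id of each row.
    Returns an array of shape (samples, seq_length, n_features).
    """
    windows = []
    for eid in np.unique(engine_ids):
        rows = features[engine_ids == eid]
        # Each start position gives one window fully inside this engine's history.
        for start in range(len(rows) - seq_length + 1):
            windows.append(rows[start:start + seq_length])
    n_features = features.shape[1]
    # reshape keeps the 3D shape even when no engine is long enough.
    return np.array(windows, dtype=np.float32).reshape(-1, seq_length, n_features)
```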

https://gist.github.com/5d1e279dcf4b655cf13685344f7c3932

https://gist.github.com/4d00646ee612fa3ad4c3f7118a73a665

The next obvious step is to write a function to generate labels!
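A matching label sketch, under the assumption that each window's target is the label at its last cycle. An engine with n cycles contributes n - seq_length + 1 labels, one per sliding window, so the counts line up with windows generated per engine in the same order. The helper name `gen_labels` is hypothetical.

```python
import numpy as np


def gen_labels(labels: np.ndarray, engine_ids: np.ndarray, seq_length: int) -> np.ndarray:
    """Return one label per sliding window, shaped (samples, 1)."""
    out = []
    for eid in np.unique(engine_ids):
        rows = labels[engine_ids == eid]
        # Window k covers rows k..k+seq_length-1; its target is the label at the last row.
        out.extend(rows[seq_length - 1:])
    return np.array(out).reshape(-1, 1)
```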

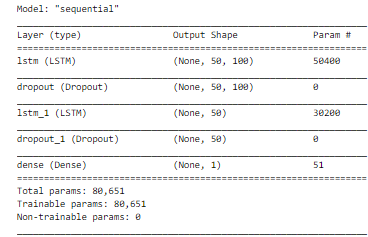

Creating LSTM Network

Next, we build a deep network. The first layer is an LSTM layer with 100 units, followed by a second LSTM layer with 50 units. Dropout is applied after each LSTM layer to reduce overfitting. The final layer is a Dense output layer with a single unit and sigmoid activation, since this is a binary classification problem.
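To see where the parameter counts in a Keras model summary come from, the arithmetic below applies the standard LSTM formula, 4 * (units * (inputs + units) + units), covering the input weights, recurrent weights, and biases of the four gates. The input size of 25 features per time step is a hypothetical value; substitute the number of columns you actually feed the network.

```python
def lstm_params(input_dim: int, units: int) -> int:
    # Four gates, each with input weights, recurrent weights and a bias vector.
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim: int, units: int) -> int:
    # Weight matrix plus one bias per output unit.
    return input_dim * units + units


def model_params(n_features: int):
    """Per-layer parameter counts for the LSTM(100) -> LSTM(50) -> Dense(1) stack."""
    return [
        ("lstm_1 (100 units)", lstm_params(n_features, 100)),
        ("dropout_1", 0),  # dropout has no trainable weights
        ("lstm_2 (50 units)", lstm_params(100, 50)),
        ("dropout_2", 0),
        ("dense (1 unit, sigmoid)", dense_params(50, 1)),
    ]


if __name__ == "__main__":
    layers = model_params(25)  # 25 features is an assumption
    for name, count in layers:
        print(f"{name:26s}{count:>8d}")
    print("total", sum(count for _, count in layers))
```

Note that only the first LSTM layer depends on the number of input features; the rest of the stack is fixed by the layer sizes.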

The model looks roughly like this:

Training the model

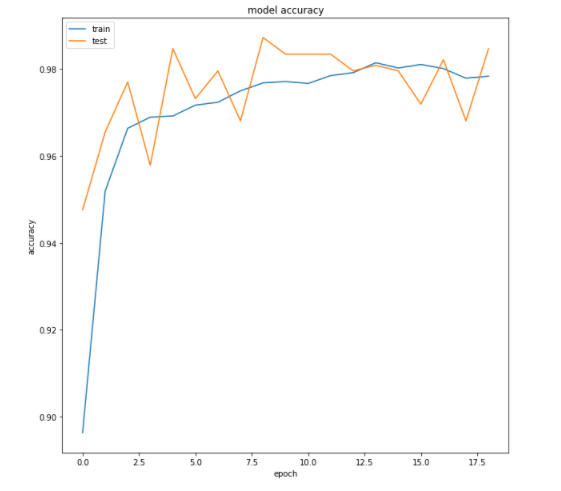

We train the network for up to 100 epochs with early stopping, which monitors the validation loss with a patience of 10 epochs.
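Keras implements this with its `EarlyStopping` callback (`monitor='val_loss'`, `patience=10`). The plain-Python sketch below mimics only the basic patience rule, ignoring options such as `min_delta` and `restore_best_weights`, to show at which epoch training would halt for a given validation-loss history. The helper name is hypothetical.

```python
def early_stopping_epoch(val_losses, patience=10, max_epochs=100):
    """Return (stop_epoch, best_epoch), both 0-based, under a simplified patience rule."""
    best = float("inf")
    best_epoch = 0
    wait = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                # Validation loss has not improved for `patience` epochs: stop here.
                return epoch, best_epoch
    # Never triggered: training runs until the last available epoch.
    return min(len(val_losses), max_epochs) - 1, best_epoch
```

For example, a loss that bottoms out at epoch 2 and then plateaus stops training at epoch 12, ten epochs after the best one.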

The model is compiled with the ‘binary_crossentropy’ loss function and the Adam optimizer.
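For reference, binary cross-entropy averages -[y * log(p) + (1 - y) * log(1 - p)] over the samples, where y is the true label and p is the sigmoid output. A small numpy sketch follows; the epsilon clip keeps log(0) from occurring, similar in spirit to what Keras does internally, though the value 1e-7 here is an assumption.

```python
import numpy as np


def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip probabilities away from 0 and 1 so the logarithms stay finite.
    p = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1 - eps)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))
```

An uninformative prediction of 0.5 costs log(2), about 0.693, per sample, while confident correct predictions push the loss toward 0.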

> Accuracy Curve for the model

Model testing

Finally, we look at the performance on the test data. Only the last cycle data for each engine id in the test data is kept for testing purposes.
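A sketch of this selection: keep each engine's final `seq_length` cycles as its single test window, and use the label at the last observed cycle as its target. Skipping engines with fewer than `seq_length` cycles is an assumption here, since such engines cannot fill a full window; the helper name `last_windows` is hypothetical.

```python
import numpy as np


def last_windows(features: np.ndarray, labels: np.ndarray, engine_ids: np.ndarray, seq_length: int):
    """Keep only each engine's most recent window and the label at its last cycle."""
    x_last, y_last = [], []
    for eid in np.unique(engine_ids):
        mask = engine_ids == eid
        rows = features[mask]
        # Assumption: engines too short to fill a window are left out.
        if len(rows) < seq_length:
            continue
        x_last.append(rows[-seq_length:])
        y_last.append(labels[mask][-1])
    x = np.array(x_last, dtype=np.float32).reshape(-1, seq_length, features.shape[1])
    y = np.array(y_last).reshape(-1, 1)
    return x, y
```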

https://gist.github.com/980c31828ed291c17624923eb0b7541e

Next, we pick the corresponding labels and build the test matrices needed to compute the test accuracy!
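Once the model outputs sigmoid probabilities for these windows, accuracy follows from thresholding them. The sketch below assumes a 0.5 threshold (probabilities strictly above it count as the positive class) and also reports precision and recall, which are informative when one class dominates. The helper name is hypothetical.

```python
import numpy as np


def classification_scores(y_true, y_prob, threshold=0.5):
    """Accuracy, precision and recall for binary labels and sigmoid probabilities."""
    y_true = np.asarray(y_true).ravel().astype(int)
    # Turn probabilities into hard 0/1 predictions.
    y_pred = (np.asarray(y_prob).ravel() > threshold).astype(int)
    tp = int(np.sum((y_pred == 1) & (y_true == 1)))
    tn = int(np.sum((y_pred == 0) & (y_true == 0)))
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    accuracy = (tp + tn) / len(y_true)
    # Guard against empty denominators when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}
```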

https://gist.github.com/ba14bde67dc15891f82956a1189e589d

The model reaches a test accuracy of approximately 96.77%.

Compiling with deepC:

To bring the saved model onto an MCU, install deepC, an open-source, vendor-independent deep learning library cum compiler and inference framework for microcomputers and microcontrollers.

https://gist.github.com/9d9d5df4f3a7530d6b1e6aab4b15bbc5

Here’s the link to the complete notebook: https://cainvas.ai-tech.systems/use-cases/predictive-maintenance-using-lstm/

Credits: Umberto Griffo

Written by: Sanlap Dutta
