The word “deepfake” combines “deep learning”, a branch of Machine Learning built on Artificial Neural Networks, with “fake”. Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness.

While the act of faking content is not new, deepfakes leverage powerful techniques from Machine Learning and AI to manipulate or generate visual and audio content with a high potential to deceive.

Table of Contents

- Introduction

- Need of DeepFake Face Detection App

- Introduction to cAInvas

- Source of Data

- Data Visualization

- Model Training

- Introduction to DeepC

- Compilation with DeepC

Introduction

The main machine learning methods used to create deepfakes are based on deep learning and involve training generative neural network architectures, such as Autoencoders or Generative Adversarial Networks, or GANs for short.

These techniques were developed for a constructive purpose, namely increasing the size of the datasets available to Machine Learning and Deep Learning scientists. Soon after their development, however, many people began to use them for criminal purposes such as:

- Using deepfakes to generate media of political figures and spread fake news through it.

- Using deepfakes to commit financial fraud.

- Using deepfakes to generate adult media by swapping people’s faces.

And the list goes on.

Need of DeepFake Face Detection App

The criminal offenses mentioned above can affect many people’s lives, so it is necessary to develop DeepFake face detection apps and models that can identify and help stop these crimes.

Introduction to cAInvas

cAInvas is an integrated development platform for creating intelligent edge devices. Not only can we train our deep learning model using TensorFlow, Keras, or PyTorch, we can also compile it with the platform’s edge compiler, DeepC, to deploy the working model on edge devices for production.

The DeepFake Face Detection App discussed here was developed in cAInvas and can be seen in its gallery.

Source of Data

One of the key features of cAInvas is its UseCases Gallery. When you work on any of its use cases, you don’t have to look for data manually, because the platform lets you import the dataset into your workspace. To load the data we just have to enter the following commands:

And that’s it: you will find the dataset loaded in your workspace.

Data Visualization

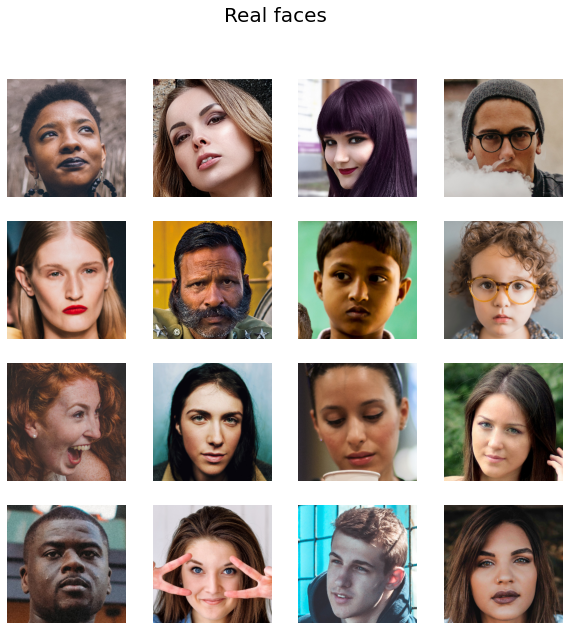

It’s always better to know what kind of data we are dealing with. The dataset provided at cAInvas contains both real faces and fake faces. Fake faces are further classified as easy or hard, depending on how difficult it is to identify them as fake. Some of the real faces in the dataset are:

Some of the fake faces in the dataset are:

Model Training

Once we have loaded the dataset through Keras’ data loader, we are ready to start training the DeepFake Face Detection model. For simplicity, we used Transfer Learning: we took a pre-trained model together with its weights and trained it to classify according to our needs.

We use Transfer Learning for several reasons:

- The accuracy attained is typically higher than that of a custom architecture trained from scratch on the same data.

- The model requires less time to train and produce good results.

- We don’t have to train the model from scratch; training starts from an already learned set of weights.

The pre-trained model used for our app was VGG-16. We kept its convolutional base and replaced the classification head with a few new layers to suit our needs.
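One way such a model could be assembled in Keras is sketched below. This is a minimal sketch rather than the notebook’s actual code: the function name `build_deepfake_classifier`, the 224×224×3 input size, and the `relu` and `softmax` activations are assumptions; only the layer types and sizes come from the model summary.

```python
import tensorflow as tf
from tensorflow.keras import layers, models
from tensorflow.keras.applications import VGG16


def build_deepfake_classifier(weights="imagenet", input_shape=(224, 224, 3)):
    """Frozen VGG-16 base followed by a small trainable classification head.

    The name, input shape, and activations are illustrative assumptions.
    """
    base = VGG16(include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = False  # keep the pre-trained convolutional weights fixed

    return models.Sequential([
        tf.keras.Input(shape=input_shape),
        base,
        layers.GlobalAveragePooling2D(),        # (7, 7, 512) -> (512,)
        layers.Dense(512, activation="relu"),   # activation is an assumption
        layers.BatchNormalization(),
        layers.Dense(128, activation="relu"),   # activation is an assumption
        layers.Dense(2, activation="softmax"),  # two classes: real and fake
    ])
```

Calling `build_deepfake_classifier().summary()` downloads the ImageNet weights on first use and prints the layer table; passing `weights=None` skips the download and builds the same architecture with random weights.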

    Model: "sequential_1"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    vgg16 (Functional)           (None, 7, 7, 512)         14714688
    _________________________________________________________________
    global_average_pooling2d (Gl (None, 512)               0
    _________________________________________________________________
    dense_3 (Dense)              (None, 512)               262656
    _________________________________________________________________
    batch_normalization (BatchNo (None, 512)               2048
    _________________________________________________________________
    dense_4 (Dense)              (None, 128)               65664
    _________________________________________________________________
    dense_5 (Dense)              (None, 2)                 258
    =================================================================
    Total params: 15,045,314
    Trainable params: 329,602
    Non-trainable params: 14,715,712
    _________________________________________________________________

As you can see, although the model has about 15 million parameters, we won’t be training all of them. Only about 329k parameters, those in the newly added layers, are trained.
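The parameter counts in the summary can be checked by hand. The sketch below reproduces them with plain arithmetic; the helper `dense_params` is a hypothetical name, and the split of the batch normalization layer into two trainable vectors (scale and shift) and two non-trainable vectors (moving mean and variance) is the standard Keras behaviour assumed here.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


vgg16_base = 14_714_688            # frozen, taken from the summary
dense_3 = dense_params(512, 512)   # 262,656
bn_trainable = 2 * 512             # scale (gamma) and shift (beta)
bn_frozen = 2 * 512                # moving mean and moving variance
dense_4 = dense_params(512, 128)   # 65,664
dense_5 = dense_params(128, 2)     # 258

trainable = dense_3 + bn_trainable + dense_4 + dense_5
non_trainable = vgg16_base + bn_frozen
total = trainable + non_trainable

print(f"Trainable: {trainable:,}")          # Trainable: 329,602
print(f"Non-trainable: {non_trainable:,}")  # Non-trainable: 14,715,712
print(f"Total: {total:,}")                  # Total: 15,045,314
```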

The loss function used was “sparse_categorical_crossentropy” and the optimizer was “Adam”. To train the model we used the Keras API with TensorFlow as the backend. The model performed well, reaching roughly 94.6% accuracy on the test set.
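To make the loss concrete, here is a small pure-Python sketch of what sparse categorical cross-entropy computes: the mean negative log-probability that the model assigns to the correct integer label. The label encoding (0 for real, 1 for fake) and the example probabilities are made up for illustration, and Keras’ own implementation additionally clips probabilities for numerical stability.

```python
import math


def sparse_categorical_crossentropy(labels, probabilities):
    """Mean of -log(p[correct class]) over all samples.

    labels: integer class indices, one per sample.
    probabilities: per-sample lists of class probabilities (e.g. softmax output).
    """
    losses = [-math.log(p[y]) for y, p in zip(labels, probabilities)]
    return sum(losses) / len(losses)


# Hypothetical batch of two images: assumed encoding 0 = real, 1 = fake.
labels = [0, 1]
probabilities = [[0.9, 0.1], [0.2, 0.8]]

loss = sparse_categorical_crossentropy(labels, probabilities)
print(round(loss, 4))  # 0.1643
```

The “sparse” variant takes integer labels directly, so the targets do not need to be one-hot encoded, which matches a two-unit softmax output like the one in this model.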

The model was trained for only 15 epochs and the accuracy achieved was:

    6/6 [==============================] - 32s 5s/step - loss: 0.2334 - accuracy: 0.9458
    Test loss: 0.23336732387542725
    Test accuracy: 0.9457831382751465

Introduction to DeepC

The DeepC compiler and inference framework is designed to run deep learning neural networks on small form-factor devices such as microcontrollers, eFPGAs, and CPUs, as well as other embedded platforms like Raspberry Pi, Odroid, Arduino, SparkFun Edge, RISC-V, mobile phones, and x86 and Arm laptops.

DeepC also offers an ahead-of-time compiler that produces optimized executables. It is based on the LLVM compiler toolchain, specialized for deep neural networks, and uses ONNX as its front end.

Compilation with DeepC

After training, the model was saved in H5 format using Keras, which stores the weights and the model configuration in a single file.

With the model saved in H5 format, we can compile it using the DeepC compiler, which comes as part of the cAInvas platform. DeepC converts the saved model into a format that can be deployed to edge devices, and all of this can be done with a single command.

And that’s all: it is that easy to build a DeepFake Face Detection App, or any other Deep Learning model, with cAInvas.

Link for the cAInvas Notebook: https://cainvas.ai-tech.systems/use-cases/deepfake-face-detection-app/

Credit: Ashish Arya

Also Read: Object Detection using Yolo v3