Classify frames into two categories, fire and not-fire, using transfer learning.

Accidents on the road can sometimes lead to a fire that gets worse over time. Fires along the road due to other causes are also hazardous to traffic and to nearby places. These fires need to be detected and controlled with the utmost urgency to keep those in the vicinity safe.

Round-the-clock human monitoring is not feasible, and waiting for people passing by on the road to report a fire is not reliable either. Continuous automated monitoring is essential to ensure maximum safety.

Heat sensors are ineffective in such open spaces. Footage from surveillance cameras along the roads can instead be monitored in real time by a network with convolutional layers that classifies each frame.

Implementation of the idea on cAInvas — here!

The dataset

The zip file has three folders: train, validation, and test. Each of these folders has two sub-folders, Fire and Non-Fire, containing the respective images. A few of the images are artificially generated by overlaying graphics of fire on non-fire images.

The images are loaded using the image_dataset_from_directory() function of the keras.preprocessing module. The default loaded image shape is (256, 256) with 3 channels.
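As a rough sketch of this loading step, assuming the archive has been extracted to a local folder: the `load_split` helper and its `data_dir` argument are hypothetical names, and `label_mode="binary"` and the batch size are assumptions rather than settings confirmed by the notebook. Recent TensorFlow releases expose the same function as `tf.keras.utils.image_dataset_from_directory`, which is what the sketch calls.

```python
import os
import tensorflow as tf

IMAGE_SIZE = (256, 256)  # the function's default, as noted above
BATCH_SIZE = 32          # assumed; this is also the function's default


def load_split(data_dir, split):
    """Load the train, validation, or test folder as a batched tf.data.Dataset."""
    return tf.keras.utils.image_dataset_from_directory(
        os.path.join(data_dir, split),
        label_mode="binary",         # assumed: one 0/1 label per image
        image_size=IMAGE_SIZE,
        batch_size=BATCH_SIZE,
        shuffle=(split == "train"),  # only the training split is shuffled
    )
```

The returned dataset carries a `class_names` attribute listing the sub-folder names in alphanumeric order, so Fire maps to label 0 and Non-Fire to label 1.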

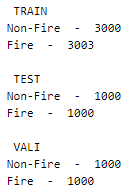

The spread of images across labels is as follows —

This is a balanced dataset.

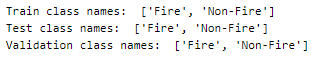
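The per-class counts can be reproduced with a few lines of standard-library Python. This is a minimal sketch that assumes the split/class folder layout described above; `count_images` and the list of image suffixes are illustrative choices, not part of the notebook.

```python
from pathlib import Path

# Assumed set of image file extensions; adjust to match the dataset.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp", ".gif"}


def count_images(data_dir):
    """Return {split: {class_name: image_count}} for a split/class folder layout."""
    counts = {}
    for split_dir in sorted(Path(data_dir).iterdir()):
        if not split_dir.is_dir():
            continue
        counts[split_dir.name] = {
            class_dir.name: sum(
                1 for f in class_dir.iterdir()
                if f.suffix.lower() in IMAGE_SUFFIXES
            )
            for class_dir in sorted(split_dir.iterdir())
            if class_dir.is_dir()
        }
    return counts
```

Comparing the Fire and Non-Fire counts within each split is a quick way to check the balance.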

A peek into the class labels —

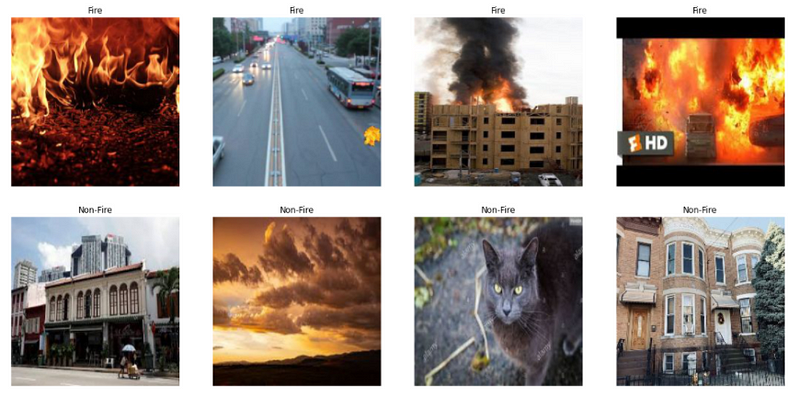

Visualization

Let us look into a few images of the dataset to gain some understanding of what we are working with —

The image at [0,1] is an example of an artificially generated one.

Preprocessing

Normalization

The pixel values are integers in the range 0 to 255. Normalizing them to the range [0, 1] helps the model converge faster. The Rescaling layer of the keras.layers.experimental.preprocessing module is used (newer releases expose it as keras.layers.Rescaling).

Pre-fetch images into memory

Elements of the input dataset are prefetched ahead of the time they are requested, so that data loading overlaps with training.
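The two preprocessing steps might be combined as in the sketch below. The `prepare` helper is a hypothetical name, and using `tf.data.AUTOTUNE` for the buffer size is an assumption; the notebook may use a fixed value.

```python
import tensorflow as tf

# Rescaling lives at tf.keras.layers.Rescaling in recent TensorFlow releases;
# older ones expose it under tf.keras.layers.experimental.preprocessing.
normalization_layer = tf.keras.layers.Rescaling(1.0 / 255)


def prepare(ds):
    """Scale pixel values to [0, 1] and prefetch batches ahead of use."""
    ds = ds.map(lambda images, labels: (normalization_layer(images), labels))
    # AUTOTUNE lets tf.data choose the prefetch buffer size (assumed setting).
    return ds.prefetch(buffer_size=tf.data.AUTOTUNE)
```

The same function would be applied to the training, validation, and test datasets so that all three see identically scaled inputs.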

The model

Transfer learning is the practice of reusing a pre-trained model's architecture (and, optionally, its weights) to solve the problem at hand. The model may have been trained on a dataset quite different from the current one, but the knowledge gained has proven effective in domains other than the one used for training.

Here, we will be using the Inception model after removing its last layer (the classification layer) and attaching our own as necessary for the current problem.

The pre-trained model's weights are kept frozen, while the layers appended at the end are trained.

The model uses binary cross-entropy loss as this is a two-class classification problem. The Adam optimizer is used and the model's accuracy is tracked to review performance.
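A minimal sketch of such a model is given below, under several assumptions: the text only says "the Inception model", so `InceptionV3` from `keras.applications` is a guess at the variant; the pooling layer and single sigmoid unit are one plausible head, not necessarily the one in the notebook; and `build_model` is a hypothetical helper name.

```python
import tensorflow as tf


def build_model(input_shape=(256, 256, 3), weights="imagenet"):
    """Frozen InceptionV3 base with a small trainable binary-classification head."""
    base = tf.keras.applications.InceptionV3(
        include_top=False,   # drop the original ImageNet classification layer
        weights=weights,     # "imagenet" downloads pre-trained weights on first use
        input_shape=input_shape,
    )
    base.trainable = False   # keep the pre-trained weights intact

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)                  # batch norm stays in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)   # assumed head: pool, then one unit
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)
    model = tf.keras.Model(inputs, outputs)

    model.compile(
        loss=tf.keras.losses.BinaryCrossentropy(),
        optimizer=tf.keras.optimizers.Adam(),
        metrics=["accuracy"],
    )
    return model
```

With the base frozen, only the final dense layer's kernel and bias are updated during training, which keeps training fast even on a modest dataset.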

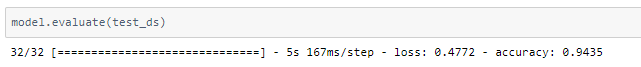

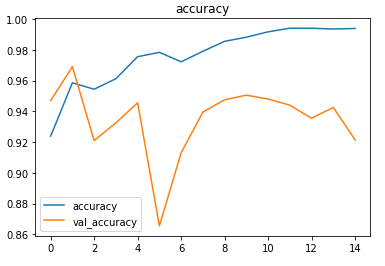

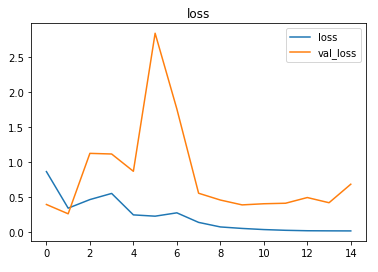

The model was trained first with a learning rate of 0.1 and then with a learning rate of 0.01. Around 93% accuracy was achieved on the test set.
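The two-stage schedule could be sketched as follows. The helper name `train_in_stages` and the number of epochs per stage are assumptions, since the text does not state how long each stage ran; recompiling with a fresh Adam optimizer per stage is one simple way to switch learning rates, not necessarily the notebook's approach.

```python
import tensorflow as tf


def train_in_stages(model, train_ds, val_ds, learning_rates=(0.1, 0.01), epochs=1):
    """Fit the model once per learning rate, recompiling with a fresh Adam each time."""
    histories = []
    for lr in learning_rates:
        # Recompiling keeps the learned weights and only swaps the optimizer.
        model.compile(
            loss="binary_crossentropy",
            optimizer=tf.keras.optimizers.Adam(learning_rate=lr),
            metrics=["accuracy"],
        )
        histories.append(
            model.fit(train_ds, validation_data=val_ds, epochs=epochs)
        )
    return histories
```

Each returned `History` object holds the per-epoch loss and accuracy for its stage, which is what the plots in the metrics section would be drawn from.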

The metrics

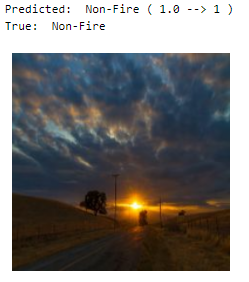

Prediction

Let's look at a test image along with the model's prediction.
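Turning the model's output into a label is a small step worth spelling out. The sketch below assumes a single sigmoid output unit and labels inferred from the folder names in alphanumeric order, so the output is read as the probability of class index 1 (Non-Fire); `label_from_probability` and the 0.5 threshold are illustrative choices.

```python
CLASS_NAMES = ["Fire", "Non-Fire"]  # alphanumeric folder order: index 0, index 1


def label_from_probability(p, threshold=0.5):
    """Map a sigmoid output (probability of class index 1) to a class name."""
    return CLASS_NAMES[1] if p >= threshold else CLASS_NAMES[0]
```

Under these assumptions a low output means fire, which is easy to get backwards when reading raw predictions.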

deepC

The deepC library, compiler, and inference framework are designed to run deep learning neural networks on small form-factor devices, focusing on the features of micro-controllers, eFPGAs, CPUs, and other embedded devices such as Raspberry Pi, Odroid, Arduino, SparkFun Edge, RISC-V, mobile phones, and x86 and Arm laptops, among others.

Compiling the model using deepC —

Head over to the cAInvas platform (link to notebook given earlier) to run and generate your own .exe file!

Credits: Ayisha D
