Detect cracks in concrete surfaces.

Cracks in concrete surfaces are major defects in civil structures. Identifying them is an important part of building inspection, during which the rigidity and tensile strength of the building are evaluated.

Automating this process involves a mobile robot with a camera that scans the building's surfaces for cracks and logs their locations.

To make predictions on a larger surface, the camera is moved over it, covering the surface in small sections. The trained model is then applied to the image of each section.
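The tiling step can be sketched in NumPy, assuming non-overlapping square patches of 227×227 pixels (an assumed model input size). The helper name `tile_image` is hypothetical. It also returns each patch's pixel offset, so that a positive prediction can be mapped back to a location on the surface.

```python
import numpy as np


def tile_image(image, tile=227):
    """Split an (H, W, C) image into non-overlapping tile x tile patches.

    tile=227 is an assumption (the input size the trained model expects).
    Returns the stacked patches and the (top, left) pixel offset of each one.
    Border strips narrower than a full tile are dropped in this sketch, and
    the image must be at least one tile in each dimension.
    """
    h, w = image.shape[:2]
    patches, offsets = [], []
    for top in range(0, h - tile + 1, tile):
        for left in range(0, w - tile + 1, tile):
            patches.append(image[top:top + tile, left:left + tile])
            offsets.append((top, left))
    return np.stack(patches), offsets
```

For a 500×700 frame this yields 2 rows × 3 columns of patches. The whole stack can be passed to `model.predict` in one batch, and the offsets of the patches predicted as cracked are the locations to log.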

Implementation of the idea on cAInvas — here!

The dataset

Özgenel, Çağlar Fırat (2019), “Concrete Crack Images for Classification”, Mendeley Data, v2.

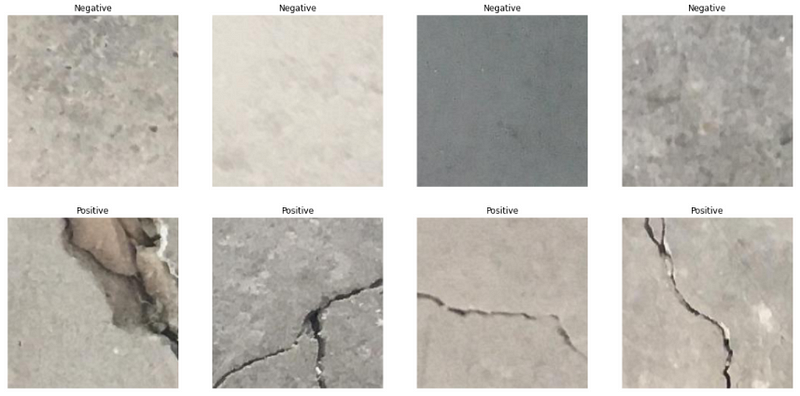

The dataset contains 20000 images in each of two categories, concrete surfaces with cracks and concrete surfaces without cracks, for 40000 images in total. No augmentation techniques have been applied.

The dataset has two folders, negative and positive, each containing the images of its class.

The dataset is loaded using the image_dataset_from_directory function of the keras.preprocessing module by specifying the dataset path, with an 80–20 (train-test) split on the images. The label_mode parameter is set to binary, so each label is a single value, 0 or 1; this is why the model later uses the BinaryCrossentropy loss.
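The loading step can be sketched as follows, assuming a single dataset directory with negative/ and positive/ sub-folders and using validation_split to carve out the 20% test subset. The sketch calls tf.keras.utils.image_dataset_from_directory, which is where newer TensorFlow versions expose the same function. The helper name `load_splits`, the image size, the batch size and the seed are assumptions.

```python
import tensorflow as tf


def load_splits(data_dir, image_size=(227, 227), batch_size=64, seed=42):
    """Load an 80-20 train/test split from one directory of class sub-folders.

    image_size, batch_size and seed are assumed values, not the notebook's.
    """
    common = dict(
        label_mode="binary",   # one float label per image: 0.0 or 1.0
        validation_split=0.2,  # hold out 20% of the images as the test set
        seed=seed,             # same seed in both calls so the subsets don't overlap
        image_size=image_size,
        batch_size=batch_size,
    )
    train_ds = tf.keras.utils.image_dataset_from_directory(
        data_dir, subset="training", **common)
    test_ds = tf.keras.utils.image_dataset_from_directory(
        data_dir, subset="validation", **common)
    return train_ds, test_ds
```

With lowercase folder names, `train_ds.class_names` is `['negative', 'positive']`, because the function assigns labels in alphanumeric order of the sub-folder names.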

The training set has 32000 images while the test set has 8000 images.

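The class labels follow the alphanumeric order of the folder names, as assigned by image_dataset_from_directory: negative is 0 and positive is 1.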

Visualization

Let us look at a few examples from the dataset.
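A small matplotlib sketch for displaying one batch with its class names. The helper `show_examples` and the 3-column grid are illustrative choices, not the notebook's own plotting code.

```python
import math

import matplotlib.pyplot as plt
import numpy as np


def show_examples(images, labels, class_names, n=9, cols=3):
    """Plot the first n images of a batch in a grid, titled with their class name."""
    rows = math.ceil(n / cols)
    fig, axes = plt.subplots(rows, cols, figsize=(3 * cols, 3 * rows), squeeze=False)
    for ax in axes.ravel():
        ax.axis("off")  # hide ticks, including on any unused grid cells
    for ax, img, label in zip(axes.ravel(), images[:n], labels[:n]):
        # image_dataset_from_directory yields float32 pixels in 0-255;
        # imshow expects uint8 for that range.
        ax.imshow(np.asarray(img).astype("uint8"))
        # binary-mode labels have shape (1,), so take the single value
        ax.set_title(class_names[int(np.asarray(label).ravel()[0])])
    return fig
```

Typical usage: `images, labels = next(iter(train_ds))`, then `show_examples(images.numpy(), labels.numpy(), train_ds.class_names)`.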

Preprocessing

Normalization

The pixel values of these images are integers in the range 0–255. Dividing them by 255 scales them to floats in the range [0, 1], which helps the model's loss converge faster. This is done with the Rescaling layer of the keras.layers.experimental.preprocessing module.
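Newer TensorFlow versions (2.6 onwards) expose the same layer as tf.keras.layers.Rescaling. The sketch below is a minimal demonstration on a few hand-picked pixel values. It does not reproduce the notebook's preprocessing code.

```python
import numpy as np
import tensorflow as tf

# Rescaling computes inputs * scale + offset (offset defaults to 0),
# so scale=1/255 maps the 0-255 pixel range onto [0, 1].
normalize = tf.keras.layers.Rescaling(1.0 / 255)

pixels = np.array([[0, 128, 255]], dtype="float32")
scaled = normalize(pixels).numpy()
print(scaled)  # every value now lies in [0, 1]
```

The layer can either be mapped over the dataset, as in `train_ds.map(lambda x, y: (normalize(x), y))`, or placed as the first layer of the model so that the same scaling is applied automatically at inference time.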

The model

The model has two sets of Conv2D-Conv2D-MaxPool2D layers followed by a Flatten layer that reshapes the feature maps into a 1D vector. This is followed by 3 Dense layers: two with ReLU activation and the last with a sigmoid activation, which outputs a single value between 0 and 1.
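The layer pattern can be sketched with the Keras Sequential API. Only the pattern comes from the description above. The filter counts, kernel sizes, the ReLU activation on the convolutions, the Dense widths, the input shape and the helper name `build_model` are all assumptions.

```python
import tensorflow as tf

layers = tf.keras.layers


def build_model(input_shape=(227, 227, 3)):
    """Two Conv2D-Conv2D-MaxPool2D blocks, Flatten, then 3 Dense layers.

    Filter counts, kernel sizes, Dense widths and input_shape are assumed.
    """
    return tf.keras.Sequential([
        layers.Input(shape=input_shape),
        # Block 1: Conv2D -> Conv2D -> MaxPool2D
        layers.Conv2D(32, 3, activation="relu"),
        layers.Conv2D(32, 3, activation="relu"),
        layers.MaxPool2D(),
        # Block 2: Conv2D -> Conv2D -> MaxPool2D
        layers.Conv2D(64, 3, activation="relu"),
        layers.Conv2D(64, 3, activation="relu"),
        layers.MaxPool2D(),
        layers.Flatten(),                       # feature maps -> 1D vector
        layers.Dense(64, activation="relu"),
        layers.Dense(32, activation="relu"),
        layers.Dense(1, activation="sigmoid"),  # probability of label 1 (positive)
    ])
```

A single sigmoid unit pairs naturally with binary labels. Its output is read as the probability of the positive (crack) class.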

The model uses the binary cross-entropy loss from the keras.losses module, since this is a classification problem with two class labels (the dataset was loaded with label_mode set to binary). The Adam optimizer from the keras.optimizers module is used, and the model's accuracy is tracked to review its performance.
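A minimal sketch of this compile step. It uses the learning rate of 0.0001 reported for this model. The helper name `compile_model` is hypothetical.

```python
import tensorflow as tf


def compile_model(model, learning_rate=1e-4):
    """Configure a binary classifier with Adam, binary cross-entropy and accuracy."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        # labels are 0.0/1.0 floats because label_mode="binary"
        loss=tf.keras.losses.BinaryCrossentropy(),
        metrics=["accuracy"],
    )
    return model
```

Calling `compile_model(model)` on the CNN described above prepares it for `model.fit`. The recorded history then contains `loss` and `accuracy`, plus `val_loss` and `val_accuracy` when validation data is supplied.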

The EarlyStopping callback of the keras.callbacks module monitors a metric (val_loss by default) and stops training if that metric does not improve (decrease for a loss, increase for a metric such as accuracy) for 5 consecutive epochs, as set by the patience parameter.

The restore_best_weights parameter is set to True so that, at the end of training, the model holds the weights from the epoch with the best value of the monitored metric.
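The callback described above can be constructed like this. This is a sketch, and the variable name `early_stop` is illustrative.

```python
import tensorflow as tf

early_stop = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss",         # the default, written out explicitly
    patience=5,                 # stop after 5 epochs without improvement
    restore_best_weights=True,  # restore the weights from the best epoch
)
```

It is passed to training as `model.fit(train_ds, validation_data=test_ds, epochs=..., callbacks=[early_stop])`. The epoch limit is not stated in the text, so it is left elided here.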

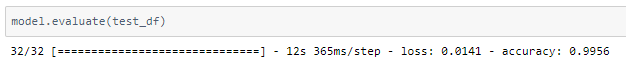

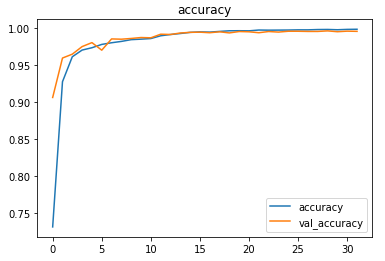

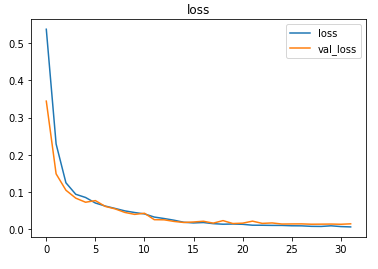

The model is trained with a learning rate of 0.0001 and achieves ~99.5% accuracy on the test set.

The metrics
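The training curves can be drawn from the `history.history` dictionary returned by `model.fit`. The following is a minimal matplotlib sketch. It assumes the model was compiled with `metrics=["accuracy"]` and trained with validation data, and the helper name `plot_history` is hypothetical.

```python
import matplotlib.pyplot as plt


def plot_history(history_dict):
    """Plot training vs. validation accuracy and loss from History.history."""
    fig, axes = plt.subplots(1, 2, figsize=(10, 4))
    for ax, metric in zip(axes, ("accuracy", "loss")):
        ax.plot(history_dict[metric], label="train")
        ax.plot(history_dict["val_" + metric], label="validation")
        ax.set_xlabel("epoch")
        ax.set_title(metric)
        ax.legend()
    return fig
```

Usage: `plot_history(history.history)`, where `history` is the object returned by `model.fit`.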

Prediction

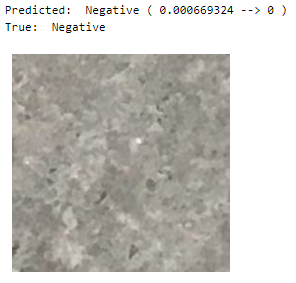

Let us look at a random image from the test set along with the model's prediction for it.
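The sigmoid output is the predicted probability of label 1 (positive, a crack). Turning it into a class name needs a decision threshold. The sketch below assumes 0.5, and the helper `to_class_names` is hypothetical.

```python
import numpy as np


def to_class_names(probabilities, class_names=("negative", "positive"), threshold=0.5):
    """Map sigmoid outputs (e.g. shape (N, 1) from model.predict) to class names.

    The 0.5 threshold is an assumption; probabilities above it count as label 1.
    """
    probs = np.asarray(probabilities).ravel()
    return [class_names[int(p > threshold)] for p in probs]
```

For a random test image this could be used as `i = np.random.randint(len(images))` followed by `to_class_names(model.predict(images[i:i + 1]))`, where `images` is one batch drawn from `test_ds`.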

deepC

The deepC library, compiler, and inference framework are designed to run deep learning neural networks on small form-factor devices such as microcontrollers, eFPGAs, and CPUs, and on other embedded devices like Raspberry Pi, ODROID, Arduino, SparkFun Edge, RISC-V, mobile phones, and x86 and ARM laptops, among others.

Compiling the model using deepC —

Head over to the cAInvas platform (link to notebook given earlier) to run and generate your own .exe file!

Credits: Ayisha D
